The configuration file is used to identify the sensors and cameras to use on your system. You can have multiple sensors and cameras physically connected to the board, but your system uses only the ones identified in this file.

You can configure as few as one camera or sensor. For testing purposes, you can simulate a camera or sensor by specifying a file. The file can contain sensor information, such as lidar data, or compressed or uncompressed video for playback.

In this release, the code that supports recording and playing video in MP4, UCV, or MOV format is contained in a library, libcamapi_video.so.1, that's separate from the Camera library, libcamapi.so.1. If you plan to record or play video in these formats, you must include libcamapi_video.so.1 in your target image. For information on how to generate a target image, see the Building Embedded Systems guide in the QNX Neutrino documentation.

The reference images shipped with Sensor Framework include the libcamapi_video.so.1 library.

Global settings

Global settings are set in the SENSOR_GLOBAL section of the configuration file. This section is enclosed by begin SENSOR_GLOBAL and end SENSOR_GLOBAL, and the file can contain only one such section. Parameters defined here aren't specific to any sensor; they're “global” to the entire system. The following is a valid SENSOR_GLOBAL section:

```
begin SENSOR_GLOBAL
external_platform_library_path:libsensor_platform_nxp.so
external_platform_library_variant:PLATFORM_VARIANT_IMX8
end SENSOR_GLOBAL
```

Parameters:

- external_platform_library_path

- The path to the external platform library to use; the library depends on your platform.

The values associated with each platform are:

- NXP iMX8: libsensor_platform_nxp.so

- x86_64 (VMware or generic Intel x86_64 platform): libsensor_platform_intel.so

- Renesas V3H: libsensor_platform_renesas.so

- external_platform_library_variant

- A string containing the platform variant; the value depends on your platform.

The values associated with each platform are:

- NXP iMX8: PLATFORM_VARIANT_IMX8

- x86_64 (VMware or generic Intel x86_64 platform): PLATFORM_VARIANT_GENERIC

- Renesas V3H: PLATFORM_VARIANT_V3H
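For comparison, a SENSOR_GLOBAL section for an x86_64 target (VMware or a generic Intel platform) combines the x86_64 values listed above. This is a sketch that copies the key/value form of the NXP example; confirm the exact syntax against the configuration files shipped with your reference image.

```
begin SENSOR_GLOBAL
external_platform_library_path:libsensor_platform_intel.so
external_platform_library_variant:PLATFORM_VARIANT_GENERIC
end SENSOR_GLOBAL
```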

Specific cameras and sensors

The configuration for a camera or sensor is specified in a section enclosed by begin SENSUNIT and end SENSUNIT, where SENSUNIT is an enumerated value from sensor_unit_t, such as SENSOR_UNIT_1. For more information about these values, see the “sensor_unit_t” section in the Sensor Library chapter of the Sensor Library Developer's Guide. If you're using the Camera library API, you must map each camera_unit_t value to the corresponding sensor_unit_t value; for example, CAMERA_UNIT_1 maps to SENSOR_UNIT_1.

Section names must follow these rules:

- one sensor must be named SENSOR_UNIT_1

- each name can appear only once in the file

- there can be no gaps between the numeric parts of the identifiers you use

The following example configures a file camera, a file-based (file_data) sensor, and a radar:

```
begin SENSOR_UNIT_3
type = file_camera
name = left
address = /accounts/1000/shared/videos/frontviewvideo1.mp4
default_video_format = rgb8888
default_video_resolution = 640, 480
end SENSOR_UNIT_3

begin SENSOR_UNIT_1
type = file_data
name = vlp-16
address = /accounts/1000/shared/videos/capture_data.mp4
playback_group = 1
direction = 0,0,0
position = 1000,0,1100
end SENSOR_UNIT_1

begin SENSOR_UNIT_2
type = radar
name = front
direction = 0,0,0
position = 3760,0,0
address = /dev/usb/io-usb-otg, -1, -1, -1, -1, 0, delphi_esr
packet_size = 64
data_format = SENSOR_FORMAT_RADAR_POLAR
end SENSOR_UNIT_2
```

Parameters:

- type

- (Required) The type of sensor or camera. The supported values are:

- usb_camera — Indicates that the camera is connected to the system via USB. Cameras must comply with the USB Video Class (UVC) or USB3 Vision standard.

- sensor_camera — Indicates that the camera sensor is directly connected to a native video port on the board, such as CSI2 or parallel port.

- ip_camera — Indicates that the camera is connected to the system via Ethernet.

- file_camera — Simulates a camera connected to the board by playing back a video file recorded with the Camera or Sensor library. The video file plays in a loop, which is useful for testing during the development phase of your project. The supported formats are uncompressed (RAW), LZ4, GZIP-compressed (GZ), MOV, UCV (UnCompressed Video), and MP4; UCV is a proprietary format. The recorded video can be uncompressed video in MOV or UCV format, or H.264-encoded video in MP4 format.

- file_data — Simulates a sensor (non-camera) connected to the board. The file you provide contains data recorded from a sensor (e.g., GPS, radar, lidar) using the Sensor library; the file loops continuously when played. The data can be uncompressed (RAW) or in a lossless compression format, such as GZIP (GZ) or LZ4. This type is useful for testing purposes.

- radar — Indicates that radar is connected to the board.

- lidar — Indicates that lidar is connected to the board.

- gps — Indicates that a GPS is connected to the board.

- imu — Indicates that an IMU is connected to the board.

- external_camera — Indicates that an external camera is being used. In the address parameter, specify the library (external camera driver) that you provide to support this camera.

- external_sensor — Indicates that an external sensor is being used. In the address parameter, specify the library (external sensor driver) that you provide to support this sensor.

- address

- (Required) This parameter refines the identity of the sensor based on its type, which matters especially when you have more than one sensor of the same type connected to your system. For example, if you have more than one USB camera connected, this parameter identifies which one to use.

Based on the sensor configured for type, here are the formats you should use:

Format for usb_camera: driver_path, bus, device, vendorID, deviceID

This format identifies a USB camera when you have more than one USB camera connected to your system. You can use a wildcard (-1) to accept any USB camera found on the system. If you have only one such camera connected, using a wildcard value of -1 for all fields works. However, if you have more than one camera, you must specify non-wildcard values for the bus/device or vendorID/deviceID parameter pair. For example, if you have more than one camera of the same make or model, you must specify bus/device, because vendorID/deviceID doesn't uniquely identify the camera.

- The required driver_path parameter specifies the path of the driver that's used for cameras on the system (e.g., /dev/usb/io-usb-otg).

- The bus and device values are unique and depend on which port you use to connect the cable on your hardware. These values are useful to describe the position of the device on the bus. It's up to you to determine how this is relevant to your system.

- vendorID and deviceID specify the device's vendor and identifier. The numbers are USB-standard identifiers.

Format for sensor_camera: cameraId, input

This format identifies a sensor camera when you have more than one sensor camera connected to the system. The segments are:

- cameraId — An identifier string. For instance, you can use the value of rdacm21_max9286 for an RDACM21 camera that's connected to a Maxim 9286 deserializer.

- input — A number that specifies the camera to use for deserializer boards. Set this to 0 for the first camera, 1 for the second, etc.

Format for file_camera: /path/to/file/video.mp4

This format identifies a RAW, LZ4, GZ, MP4 (H.264-encoded video), UCV, or MOV (uncompressed video) file. You can create the prerecorded file using APIs from the Camera or Sensor library.

Format for file_data: /path/to/file/data.lz4

This format identifies a prerecorded file containing sensor data, which can be raw binary (RAW) or losslessly compressed as a GZIP (GZ) or LZ4 file.

Format for imu: driver_path, bus, device, vendorID, deviceID, model (or, alternatively, driver_path, model)

This format identifies an inertial measurement unit (IMU) when you have more than one IMU connected to the system. You can use a wildcard (-1) to accept any IMU found on the system. If you have only one IMU connected, using a wildcard value of -1 for all fields works. However, if you have more than one IMU connected, you must specify non-wildcard values for the bus/device or vendorID/deviceID parameter pair. For example, if you have more than one IMU of the same make or model, you must specify bus/device, because vendorID/deviceID doesn't uniquely identify the IMU.

- The required driver_path parameter specifies the path of the driver that's used for IMUs on the system (e.g., /dev/serusb1).

- The bus and device values are unique and depend on which port you use to connect the cable on your hardware. These values are hardware specific and describe the device's position on the bus.

- vendorID and deviceID specify the device's vendor and identifier.

- The model specifies the make and model of the sensor (e.g., xsens_mti-g-710, novatel_oem6).

Format for ip_camera: ip_address, type_of_camera, [serial_num]

This format identifies an IP camera connected to your system. The ip_address segment is the camera's IP address. If you don't know this address, specify 0.0.0.0 to use auto-discovery. The serial_num segment isn't required, whether you use auto-discovery or a valid IP address.

The type_of_camera segment specifies the camera type, which can be onvif for an ONVIF-compliant camera or gige_vision for a GigE vision-compliant camera.

The serial_num segment is optional and represents the camera's serial number. With auto-discovery, the camera whose serial number matches this value is used. If you leave this segment blank when using auto-discovery, the first IP camera found on the system is used; this is typical when you have only one camera connected to your system.

Format for lidar: ip_address, ip_based_lidar_model (IP-based lidar) or serial_driver_path, device_address, serial_based_lidar_model (serial-based lidar)

This format identifies the lidar sensor when you have more than one lidar sensor connected to your system. To configure an IP-based lidar, the ip_address segment must be a valid IP address, because the lidar broadcasts its data over UDP.

For the ip_based_lidar_model segment, you can use the string velodyne_vlp-16 or velodyne_vlp-16-high-res to refer to an IP-based lidar from Velodyne. The string must match the model that's connected to your target.

To configure a serial-based lidar, specify the serial_driver_path segment as /dev/serX (where X is the port and depends on your configuration). For device_address, you must specify the device address assigned to the lidar. For serial_based_lidar_model, you can use leddartech_vu8 to specify a serial-based lidar from Leddartech.

Format for radar: driver_path, bus, device, vendorID, deviceID, channelID, model

This format identifies the radar sensor when you have more than one radar sensor connected to your system. You can use a wildcard (-1) to accept any radar sensor connected to the system. If you have only one such sensor connected, using a wildcard value of -1 for all fields works. However, if you have more than one sensor, you must specify non-wildcard values for at least the bus/device parameter pair or the deviceID parameter. For example, if you have more than one radar sensor of the same make or model, you must specify bus/device, because deviceID doesn't uniquely identify the sensor.

- The required driver_path parameter specifies the path of the driver that's used for sensors on the system (e.g., /dev/usb/io-usb-otg).

- The bus and device values are unique and depend on where you connect the USB cable on your board. The values are useful to describe the position of the radar sensor on the USB bus. It's up to you to determine how this is relevant to your system.

- The channelID identifies the channel on the USB-to-CAN adapter to which the sensor is connected. For example, in the case of the Kvaser USB-to-CAN adapter, channel 0 or 1 can be specified.

- The vendorID and deviceID identify the Kvaser cable.

- The model specifies the make and model of the sensor (e.g., delphi_srr2_right, delphi_srr2_left).

Format for gps: driver_path, bus, device, vendorID, deviceID, model (or, alternatively, driver_path, model)

This format identifies the GPS sensor when you have more than one GPS sensor on your system. You can use a wildcard (-1) to accept any GPS unit found on the system. If you have only one such unit connected, using a wildcard value of -1 for all fields works. However, if you have more than one unit connected, you must specify non-wildcard values for the bus/device or vendorID/deviceID parameter pair. For example, if you have more than one sensor of the same make or model, you must specify bus/device, because vendorID/deviceID doesn't uniquely identify the sensor.

- The required driver_path parameter specifies the path of the driver that's used for GPS units on the system (e.g., /dev/usb/io-usb-otg, /dev/serusb1).

- The bus and device values are unique and depend on which port you use to connect the cable on your hardware. These values are useful to describe the position of the device on the bus. It's up to you to determine how this is relevant to your system.

- The vendorID and deviceID specify the device's vendor and identifier.

- The model specifies the make and model of the sensor (e.g., xsens_mti-g-710, novatel_oem6).

Format for external_camera: driver_path, input

This format identifies the user-provided library that's used for external cameras on the system.

The driver_path specifies the path of this library. The library must implement the functions defined in external_camera_api.h. For more information, see “Using external camera drivers” in the Camera Developer's Guide.

The input specifies the value that the Sensor service passes to open_external_camera() as the input parameter to identify the camera to use when your driver supports multiple cameras.

Format for external_sensor: driver_path

This format identifies the user-provided library that's used for external sensors on the system.

The driver_path specifies the path of this library. The library must implement the functions defined in external_sensor_api.h. For more information, see “Using external sensor drivers” in the Sensor Developer's Guide.
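As a sketch of these formats in use, the following address lines combine drivers, identifiers, and models that appear above: a single USB camera accepted with wildcards, the first camera on a Maxim 9286 deserializer, an IMU identified only by its serial driver and model, and a GPS unit on USB with wildcard bus, device, and IDs. Each line belongs in its own SENSOR_UNIT section; they're listed together only for comparison, and the pairings of driver path and model are assumptions to adapt to your hardware.

```
address = /dev/usb/io-usb-otg, -1, -1, -1, -1
address = rdacm21_max9286, 0
address = /dev/serusb1, xsens_mti-g-710
address = /dev/usb/io-usb-otg, -1, -1, -1, -1, novatel_oem6
```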

- coordinate_system

- (Optional for sensors whose type is gps, imu, lidar, or radar) This parameter indicates whether the sensor data you receive through the Sensor library is transformed to your reference coordinate system based on each sensor's positioning data. Valid values are:

- car — Indicates that the sensor data is transformed using the position and direction parameters

- sensor — (Default) Indicates that no transformation is applied

The coordinate_system parameter isn't applicable to cameras.
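For example, to receive the front radar's data in your reference (vehicle) coordinate system instead of sensor coordinates, you could add coordinate_system = car to the SENSOR_UNIT_2 section from the earlier example. The transformation then uses that section's position and direction values. This is a sketch; only the last parameter differs from the original section.

```
begin SENSOR_UNIT_2
type = radar
name = front
direction = 0,0,0
position = 3760,0,0
address = /dev/usb/io-usb-otg, -1, -1, -1, -1, 0, delphi_esr
packet_size = 64
data_format = SENSOR_FORMAT_RADAR_POLAR
coordinate_system = car
end SENSOR_UNIT_2
```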

- csi_port

- (Required only for platforms with multiple CSI2 ports) The CSI2 port that the camera is connected to. For instance, if the board supports connecting the camera to one of two such ports, you must specify which one with csi_port = 0 or csi_port = 1.

- data_format

- (Required for lidar, radar, GPS, or IMU systems) Based on the sensor configured for type, here are the formats you should use:

For lidar:

- SENSOR_FORMAT_LIDAR_POLAR — the data is in polar coordinates

- SENSOR_FORMAT_LIDAR_SPHERICAL — the data is in spherical coordinates

- SENSOR_FORMAT_POINT_CLOUD — the data is in point cloud format (Cartesian coordinates, which are X, Y, and Z coordinates)

For radar:

- SENSOR_FORMAT_RADAR_POLAR — the data is in polar coordinates

- SENSOR_FORMAT_RADAR_SPHERICAL — the data is in spherical coordinates

For GPS and IMU:

- SENSOR_FORMAT_GPS — GPS

- SENSOR_FORMAT_IMU — inertial measurement unit (IMU)

- default_video_format

- (Optional for cameras) The default video format the camera starts up with. This is useful when you want to change the system-provided video format for the camera and don't want to use the Camera API to do so. You must know the video formats supported by your camera. Based on the supported formats, you can use these corresponding values to configure the default video format:

- nv12 — NV12 formatted data.

- rgb8888 — 32-bit ARGB data.

- rgb888 — 24-bit RGB data.

- gray8 — 8-bit gray-scale image data.

- bayer — 10-bit Bayer frame type.

- cbycry — YCbCr 4:2:2 packed frame type.

- rgb565 — 16-bit RGB data (5-bit red component, 6-bit green component, 5-bit blue component).

- ycbcr420p — 4:2:0 YCbCr format where Y, Cb and Cr are stored in separate planes.

- ycbycr — YCbCr 4:2:2 packed frame type where the pixel order is Y, Cb, Y, Cr.

- ycrycb — YCbCr 4:2:2 packed frame type where the pixel order is Y, Cr, Y, Cb.

- crycby — YCbCr 4:2:2 packed frame type where the pixel order is Cr, Y, Cb, Y.

- bayer14_rggb_padlo16 — 14-bit Bayer data in a 16-bit buffer.

- default_video_framerate

- (Optional; for cameras and file cameras only) The default video frame rate the camera starts up with. This is useful when you want to change the system-provided frame rate for the camera and don't want to use the Camera API to do so. You can provide a rate using a numeric value, such as 60 to indicate 60 frames per second (fps). You must know the frame rates that are supported by your camera.

- default_video_resolution

- (Optional; for cameras and file cameras only) The default video resolution to show on the display. This is useful when you want to change the resolution that's provided for the camera and you don't want to use the Camera API to do so. For example, you can provide a value of 640,480 to indicate a 640 x 480 resolution. You must know the resolutions that are supported by your camera.
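A minimal sketch of a USB camera section that sets all three defaults follows. The name and the format, frame rate, and resolution values are hypothetical; use values that your camera actually supports.

```
begin SENSOR_UNIT_1
type = usb_camera
name = cabin
address = /dev/usb/io-usb-otg, -1, -1, -1, -1
default_video_format = nv12
default_video_framerate = 30
default_video_resolution = 1280, 720
end SENSOR_UNIT_1
```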

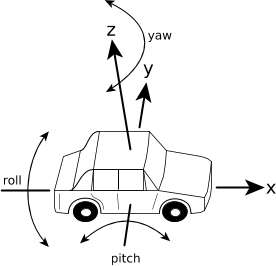

- direction

- (Optional) A comma-separated list of angles (in degrees, counterclockwise) that indicates the orientation of the sensor. List the angles in the order of yaw, pitch, and roll, as you would in a right-handed, three-dimensional Cartesian coordinate system where:

- yaw is the angle about the z-axis

- pitch is the angle about the y-axis

- roll is the angle about the x-axis (direction of travel)

Figure 1. Direction of travel.

If the x-axis isn't the direction of travel in your reference coordinate system, you must adjust the values of the angles for direction accordingly. The coordinate system that you use to specify the direction must be the same as the one you use to specify the position.
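As a worked example, assume the x-axis points in the direction of travel and the z-axis points up, so that in a right-handed system the y-axis points to the vehicle's left. A yaw of 90 degrees counterclockwise about the z-axis then turns a sensor from facing forward to facing left, and a yaw of 180 degrees turns it to face backward. The following hypothetical values describe a left-facing sensor and a rear-facing sensor, each with zero pitch and roll; each line belongs in a different sensor's section.

```
direction = 90,0,0
direction = 180,0,0
```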

- f_number

- The aperture of the camera, expressed as the ratio of the focal length to the diameter of the entrance pupil of the lens. This is used for information purposes only, and can be retrieved via the CAMERA_PHYSPROP_APERTURE property from the Camera API.

- flicker_cancel_mode

- (Optional for cameras only) This parameter is for cameras that support 50 or 60 Hz flicker-cancellation algorithms. The value can be one of the following:

- off — (Default) Flicker-cancellation algorithm is off.

- on_auto — Algorithm is on and detecting whether 50 or 60 Hz frequency is used.

- on_50Hz — Algorithm is on and filtering out 50 Hz flicker.

- on_60Hz — Algorithm is on and filtering out 60 Hz flicker.

- filter_profile

- (Required for GPS systems only) The GPS filter to apply. For an XSens MTi 100-series GPS, you can use these values:

- general — The default setting, which makes a few assumptions about movements. Yaw is referenced by comparing GNSS acceleration with the on-board accelerometers.

- general_nobaro — Similar to the general setting, but it doesn't use the barometer for height estimation.

- general_mag — Similar to the general setting, but the yaw is based on magnetic heading, together with comparison of GNSS acceleration and the accelerometers.

- automotive — Yaw is first determined using the GNSS. If the GNSS is lost, yaw is determined using the velocity estimation algorithm for the first 60 seconds and then determined using only gyroscope integration. If a GNSS outage occurs regularly or GNSS availability is poor (e.g., in urban canyons), use high_performance_edr instead. The automotive filter uses holonomic constraints and requires that the GPS be mounted according to the settings specified in the XSens User Manual.

- high_performance_edr — This filter is specially designed for ground-based navigation applications where deteriorated GNSS conditions and GNSS outages occur regularly. This filter doesn't use holonomic constraints; therefore, it doesn't impose GPS mounting requirements. It's ideal for slow-moving ground vehicles.

For more information, see the XSens MTi 100-series GPS documentation on the XSens website (https://www.xsens.com/).
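The following sketch shows a GPS section for an Xsens MTi-G-710, one of the MTi 100-series units, connected through a serial driver. The section number, name, driver path, and choice of the automotive profile are assumptions. The required packet_size and sample_frequency parameters are deliberately omitted because suitable values depend on your unit, so this fragment isn't complete on its own.

```
begin SENSOR_UNIT_1
type = gps
name = gnss
address = /dev/serusb1, xsens_mti-g-710
data_format = SENSOR_FORMAT_GPS
filter_profile = automotive
end SENSOR_UNIT_1
```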

- horizontal_fov

- (Optional) The horizontal field of view of the sensor. This value is specified in degrees. If two fields of view are supported, you can specify both values delimited by a comma. The horizontal axis is defined as the axis that runs from left to right, lengthwise across the sensor.

- i2c_addr

- (Optional; for sensor cameras only) This parameter specifies the 7-bit I2C address used to communicate with the sensor camera. If this parameter isn't specified, the default value for that sensor camera is used.

- i2c_path

- (Optional; for sensor cameras only) This parameter specifies the path of the I2C driver used to communicate with this camera. For example, /dev/i2c1.
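For a sensor camera, the I2C and CSI2 parameters sit alongside the address. The following sketch assumes the RDACM21 camera on a Maxim 9286 deserializer described under address, on a board with two CSI2 ports; the section number, name, I2C path, and port number are hypothetical. Because i2c_addr is omitted, the default I2C address for that sensor camera is used.

```
begin SENSOR_UNIT_1
type = sensor_camera
name = front_csi
address = rdacm21_max9286, 0
csi_port = 0
i2c_path = /dev/i2c1
end SENSOR_UNIT_1
```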

- lidar_fov

- (Optional) The horizontal rotation (azimuth, in degrees) of interest from the lidar sensor.

For Velodyne lidar sensors, lidar_fov specifies the horizontal rotation (i.e., the difference between FOV End and FOV Start) that you configured by using the Velodyne webserver user interface. Valid rotations are in the range [1..360].

For Leddartech lidar sensors, lidar_fov specifies the horizontal rotation that the sensor supports. For example, Leddartech's Vu8 is available in different configurations that support 20, 48, or 100 degrees.

- name

- (Required) A unique string to identify the camera or sensor.

- num_user_buffers

- (Optional) The number of buffers required for processing. If this value isn't specified, the default is 2.

- packet_size

- (Required for GPS, IMU, lidar, or radar systems) The number of data entries in each packet, where a packet is the data in the sensor_buffer_t structure. The Velodyne lidar sensor has rules about the allowed values for packet_size. See the relevant example, “Example: Sensor configuration file for lidar”, for details.

- playback_group

- (Required for file cameras only) This parameter permits synchronized playback of the specified video file. You can configure multiple cameras to be part of the same group. A value of 0 indicates that the camera isn't part of a group, a value of 1 indicates that the camera is part of group 1, and so on. Up to four groups are supported.
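For example, two file cameras that should play back in sync can both join group 1. In this sketch, the second video path and the name right are hypothetical; the first path and the name left come from the earlier example.

```
begin SENSOR_UNIT_1
type = file_camera
name = left
address = /accounts/1000/shared/videos/frontviewvideo1.mp4
playback_group = 1
end SENSOR_UNIT_1

begin SENSOR_UNIT_2
type = file_camera
name = right
address = /accounts/1000/shared/videos/frontviewvideo2.mp4
playback_group = 1
end SENSOR_UNIT_2
```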

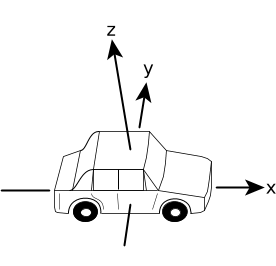

- position

- (Optional) A comma-separated list of distances (in millimeters) from an origin of your choice (the center of the vehicle's rear axle is commonly used) to the sensor's installation point. Specify the distances in the order of X, Y, and Z, as you would in a right-handed, three-dimensional Cartesian coordinate system where:

- X is the distance on the x-axis (direction of travel)

- Y is the distance on the y-axis

- Z is the distance on the z-axis

Figure 2. Direction of travel.

If the x-axis isn't the direction of travel in your reference coordinate system, adjust the values of the distances for position accordingly. The coordinate system that you use to specify the position must be the same as the one you use to specify the direction.
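As a worked example, suppose the origin is the center of the rear axle, the x-axis points forward, and the z-axis points up, so the y-axis points to the vehicle's left. A hypothetical camera mounted 2 m ahead of the rear axle, 0.5 m to the left of the centerline, and 1.2 m above the axle converts to millimeters as X = 2000, Y = 500, and Z = 1200:

```
position = 2000,500,1200
```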

- password

- (Required for IP cameras only) The password to access the IP camera. This parameter also requires that you use the username parameter.

- range_fov

- (Optional) The sensor range, in millimeters. If two fields of view are supported, you can specify both values delimited by a comma.

- reference_clock

- (Optional) The type of clock used to generate the sensor timestamps.

The valid values are:

- external — Dynamically load and use the external (user-provided) clock library that's specified by reference_clock_library to generate sensor timestamps. If you specify external as your reference clock, you must also set reference_clock_library.

- monotonic — Use CLOCK_MONOTONIC. This is the default setting. The Sensor service generates the timestamps for the sensors based on its own monotonic clock.

- ptp — Use the Precision Time Protocol (PTP) daemon to synchronize sensor timestamps when sensors are across multiple targets.

- real_time — Use CLOCK_REALTIME. The Sensor service generates the timestamps for the sensors based on the “current time of day” clock.

- reference_clock_library

- (Required if external is specified for reference_clock) The path to the external library that you provide to the Sensor service to use as the reference time for sensor timestamps. For example:

```
...
reference_clock = external
reference_clock_library = /usr/lib/libexternal_clock_example.so
...
```

You can configure each sensor to use a different external clock library, or multiple sensors to share one library. You may provide multiple external clock libraries. For more information, see “Using external clocks” in the Sensor Developer's Guide.
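For instance, two sensors can share one external clock library by pointing to the same path. This sketch reuses the library path from the reference_clock_library example; the other parameters each section needs are elided with ... as in that example.

```
begin SENSOR_UNIT_1
...
reference_clock = external
reference_clock_library = /usr/lib/libexternal_clock_example.so
end SENSOR_UNIT_1

begin SENSOR_UNIT_2
...
reference_clock = external
reference_clock_library = /usr/lib/libexternal_clock_example.so
end SENSOR_UNIT_2
```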

- return_mode

- (Required for lidar systems only) The type of reflection data to use.

The valid values are:

- strongest — Use the strongest reflection data.

- last — Use the last or farthest reflection data.

- dual — Use both the strongest and last reflection data. This doubles the data that's received by the Sensor service.

- rotations_per_minute

- (For lidar systems only) The number of 360-degree rotations that occur each minute.

- sample_frequency

- (Required for GPS and IMU systems only) The interval at which new data is received from the GPS or IMU, in microseconds.

- udp_data_port

- (Required for lidar systems only) The UDP port to use for data from the lidar system. The default is 2368.

- udp_position_port

- (Required for lidar systems only) The UDP port to use for position information from the lidar system. The default is 8308.
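Pulling the lidar parameters together, a Velodyne VLP-16 section might look like the following sketch. The IP address 192.168.1.201 is an assumption (it's the VLP-16 factory default, but your sensor may be configured differently); the UDP ports are the defaults listed above; and the 600 rpm rotation rate, full 360-degree lidar_fov, strongest return mode, and point-cloud output are illustrative choices. packet_size is omitted because the Velodyne rules for it are described in “Example: Sensor configuration file for lidar”.

```
begin SENSOR_UNIT_1
type = lidar
name = vlp-16
address = 192.168.1.201, velodyne_vlp-16
data_format = SENSOR_FORMAT_POINT_CLOUD
return_mode = strongest
rotations_per_minute = 600
lidar_fov = 360
udp_data_port = 2368
udp_position_port = 8308
end SENSOR_UNIT_1
```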

- username

- (Required for IP cameras only) The user ID to use to log in to an IP camera. This parameter also requires that you use the password parameter.
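An IP camera section combines the ip_camera address format with these credentials. In this sketch, the IP address, name, user ID, and password are hypothetical placeholders; because a valid IP address is given, the optional serial_num segment is left out.

```
begin SENSOR_UNIT_1
type = ip_camera
name = dock
address = 192.168.1.50, onvif
username = camera_user
password = camera_password
end SENSOR_UNIT_1
```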

- vertical_fov

- (Optional) The vertical field of view of the sensor. This value is specified in degrees. If two fields of view are supported, you can specify both values delimited by a comma. The vertical axis is defined as the axis that runs from the top of the sensor to the bottom of the sensor.
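For a sensor that supports two fields of view, such as a radar with separate long-range and mid-range modes, each field-of-view parameter takes two comma-delimited values: degrees for horizontal_fov and vertical_fov, and millimeters for range_fov. The numbers in this sketch are hypothetical, not taken from any specific sensor's data sheet.

```
horizontal_fov = 20, 90
vertical_fov = 4, 6
range_fov = 175000, 60000
```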