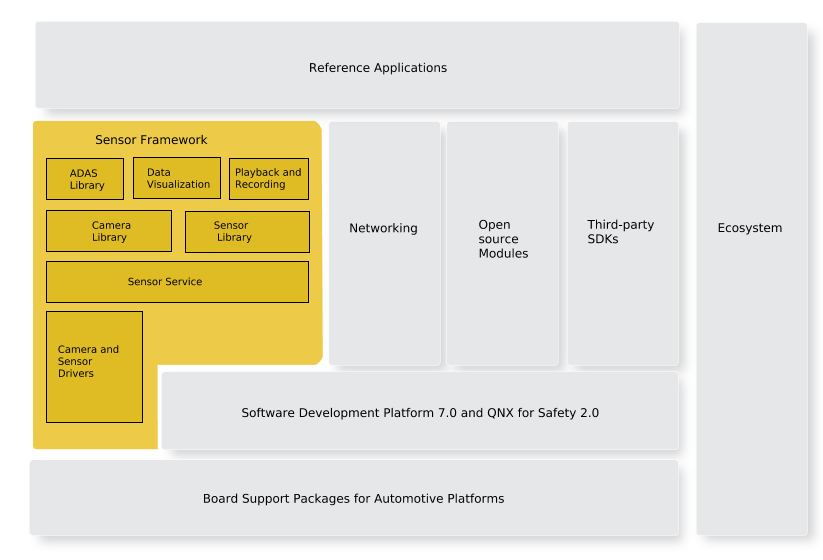

Sensor Framework

Figure 1. Sensor Framework and QNX Platform for ADAS Services.

The Sensor Framework includes the following components:

- Sensor service

- C-based APIs and libraries

- Camera and sensor drivers

- Playback and recording capabilities (primarily for development use)

- Data visualization capabilities

- Publishing and subscribing to sensor data

The Sensor service loads the configuration for the cameras and sensors that you want to use on your system. You can modify its configuration file so that the Sensor service recognizes the cameras that are connected to the target board. In addition to the sensor configuration file, an interim data configuration file is available; it identifies the data units that correspond to interim data from your sensors and cameras. The Sensor service also lets you play prerecorded video.

- ADAS library

  This library lets ADAS applications visualize data using viewers and algorithms, through API functions and an ADAS library configuration file. The JSON-formatted configuration file describes how you intend to use the ADAS library and is used to initialize the viewers and algorithms that you specify in it. In this file, you can specify the sensor input to use, the provided viewers that present the data, and the algorithms to run. The ADAS library also supports synchronized recording from all configured sensors. If you have custom algorithms that you want to integrate with the ADAS library, hooks and an API are provided for doing so. For more information, see the ADAS Developer's Guide.

- Sensor library

  This library allows applications to access all sensors that are available through the Sensor Framework. In the context of the Sensor library, a sensor can be a camera, lidar, radar, or GPS system. The library lets you record sensor data either in a proprietary uncompressed format or with lossless compression (GZIP or LZ4).

  The library also provides a framework for working with sensor data that lets you publish and subscribe to interim data. In addition, it provides hooks that let you supply the library with data from sensors that aren't supported by the QNX Platform for ADAS. For more information, see the Sensor Developer's Guide.

- Camera library

  This library simplifies interacting with the cameras connected to your system. You can use the Camera library to build applications that stream live video, record video, and encode video. Encoding and recording are available in H.264 (MP4 container) or uncompressed (MOV container or the proprietary UCV format). In addition, an API is available for integrating drivers for cameras that aren't yet supported by the QNX Platform for ADAS. For more information, see the Camera Developer's Guide.
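The publish/subscribe model that the Sensor library offers for interim data can be illustrated with a small, self-contained C sketch. This is not the Sensor library's API: every type and function here (`interim_unit_t`, `interim_topic_t`, `topic_subscribe()`, `topic_publish()`, `track_closest()`) is a hypothetical name chosen for illustration, and delivery is synchronous and in-process, whereas a real framework may deliver data across processes or threads.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical interim data unit (NOT the Sensor library's type). */
typedef struct {
    uint64_t timestamp_us; /* capture time, in microseconds */
    float    distance_m;   /* example payload: distance to nearest object */
} interim_unit_t;

/* Subscriber callback, invoked once for every published unit. */
typedef void (*interim_cb_t)(const interim_unit_t *unit, void *user);

#define MAX_SUBSCRIBERS 8

/* Hypothetical topic: a fixed-size table of subscribers. */
typedef struct {
    interim_cb_t cb[MAX_SUBSCRIBERS];
    void        *user[MAX_SUBSCRIBERS];
    size_t       count;
} interim_topic_t;

/* Register a subscriber; returns 0 on success, -1 if the table is full. */
int topic_subscribe(interim_topic_t *t, interim_cb_t cb, void *user)
{
    assert(t != NULL && cb != NULL);
    if (t->count == MAX_SUBSCRIBERS)
        return -1;
    t->cb[t->count] = cb;
    t->user[t->count] = user;
    t->count++;
    return 0;
}

/* Deliver one unit to every subscriber, in subscription order.
   Returns the number of subscribers notified. */
size_t topic_publish(const interim_topic_t *t, const interim_unit_t *unit)
{
    assert(t != NULL && unit != NULL);
    for (size_t i = 0; i < t->count; i++)
        t->cb[i](unit, t->user[i]);
    return t->count;
}

/* Example subscriber state: counts units and remembers the closest object. */
typedef struct {
    size_t received;
    float  closest_m;
} closest_tracker_t;

void track_closest(const interim_unit_t *unit, void *user)
{
    closest_tracker_t *tr = user;
    if (tr->received == 0 || unit->distance_m < tr->closest_m)
        tr->closest_m = unit->distance_m;
    tr->received++;
}
```

The fixed-size subscriber table avoids dynamic allocation, which is a common choice in embedded code; the trade-off is that callbacks run in the publisher's context, so a slow subscriber delays every other one.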

Playback and recording capabilities are intended primarily for development and demonstration. They let you capture data from a sensor (as a data file) or video from a camera. This is useful for developer testing, because you can feed the recorded data file or video to your applications to test them.
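The record-then-replay workflow can be sketched in standard C. This is only an illustration of the idea, assuming a simple fixed-size binary record; it is not the platform's proprietary recording format, and `sample_record_t`, `record_samples()`, `replay_samples()`, and `sum_values()` are hypothetical names.

```c
#include <assert.h>
#include <stdint.h>
#include <stdio.h>

/* Hypothetical fixed-size record (NOT the platform's recording format). */
typedef struct {
    uint64_t timestamp_us; /* capture time, in microseconds */
    uint32_t sensor_id;    /* which sensor produced the sample */
    float    value;        /* example payload */
} sample_record_t;

typedef void (*replay_cb_t)(const sample_record_t *rec, void *user);

/* Recording: append n records to an open binary stream.
   Returns the number of records written. */
size_t record_samples(FILE *f, const sample_record_t *s, size_t n)
{
    assert(f != NULL && (s != NULL || n == 0));
    return fwrite(s, sizeof *s, n, f);
}

/* Playback: rewind the stream and hand each record to cb, the way a
   test harness would drive an application under test.
   Returns the number of records replayed. */
size_t replay_samples(FILE *f, replay_cb_t cb, void *user)
{
    sample_record_t rec;
    size_t count = 0;

    assert(f != NULL && cb != NULL);
    rewind(f); /* also required when switching from writing to reading */
    while (fread(&rec, sizeof rec, 1, f) == 1) {
        cb(&rec, user);
        count++;
    }
    return count;
}

/* Example consumer: sums the replayed values into a double. */
void sum_values(const sample_record_t *rec, void *user)
{
    *(double *)user += rec->value;
}
```

Because the records are written and read with the same struct layout, the file is only portable between builds that share that layout; a real recording format would typically add a header and explicit field encoding.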

The Sensor Framework provides data visualization capabilities that let you take data from sensors and cameras and create a representation of what they “see.” These visualizations help you develop and test your ADAS applications because they show what the cameras and sensors connected to your target detect. They can include video with overlays for detected objects, and point cloud representations for sensors such as lidar. Point clouds represent the objects detected by the various sensors, and the retrieved information is shown in a common 3-D coordinate system. For example, on a display, lidar firings can appear as points while data from other sensors appears as boxes.
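A common way to place data from several sensors into one 3-D coordinate system is to apply each sensor's mounting (extrinsic) calibration as a rigid transform, p_common = R · p_sensor + t, where R is a 3×3 rotation matrix and t is the sensor's position in the common frame. The following C sketch assumes that approach; the document doesn't say how the Sensor Framework does this internally, and `point3_t`, `extrinsic_t`, `to_common_frame()`, and `transform_cloud()` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    double x, y, z;
} point3_t;

/* Hypothetical extrinsic calibration for one sensor:
   p_common = R * p_sensor + t, with R stored row-major. */
typedef struct {
    double r[3][3]; /* rotation from the sensor frame to the common frame */
    double t[3];    /* sensor origin expressed in the common frame */
} extrinsic_t;

/* Transform a single point from the sensor frame to the common frame. */
point3_t to_common_frame(const extrinsic_t *e, point3_t p)
{
    point3_t q;
    q.x = e->r[0][0] * p.x + e->r[0][1] * p.y + e->r[0][2] * p.z + e->t[0];
    q.y = e->r[1][0] * p.x + e->r[1][1] * p.y + e->r[1][2] * p.z + e->t[1];
    q.z = e->r[2][0] * p.x + e->r[2][1] * p.y + e->r[2][2] * p.z + e->t[2];
    return q;
}

/* Transform a whole point cloud (for example, one lidar sweep). */
void transform_cloud(const extrinsic_t *e, const point3_t *in,
                     point3_t *out, size_t n)
{
    assert(e != NULL && (n == 0 || (in != NULL && out != NULL)));
    for (size_t i = 0; i < n; i++)
        out[i] = to_common_frame(e, in[i]);
}
```

For example, with a sensor yawed 90° about the z axis (R rows: {0, -1, 0}, {1, 0, 0}, {0, 0, 1}) and mounted at (1.5, 0, 2.0), a return 10 m straight ahead of the sensor, (10, 0, 0), lands at (1.5, 10, 2.0) in the common frame. Once every sensor's data is in that frame, points and boxes from different sensors can be drawn on the same display.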

The QNX Platform for ADAS provides camera and sensor drivers that support specific cameras and sensors. For a list of the supported hardware, see the Getting Started Overview. If your camera or sensor isn't supported, you can contact QNX Software Systems for help writing a custom driver, or you can integrate it using the APIs provided in the Camera and Sensor libraries.

The QNX Platform for ADAS provides sample applications, and you can choose the vision-processing algorithms that you want to use in your application. For more information about the vision-processing algorithms tested with our product, see the “Reference image vision-processing support” section in the Getting Started Overview guide.

Services for the QNX Platform for ADAS

The QNX Platform for ADAS provides services that help you build applications and an ADAS system. Included with your system is the Sensor service, which loads the configuration for the cameras and sensors that you want to use on your system. The service uses a configuration file that you must modify so that it recognizes the cameras and sensors connected to the target board.

For more information about the Camera and SLM services, see the System Services guide for the QNX Platform for ADAS.

In addition to these services, new utilities simplify building an image to load onto your target. For more information, see Working with Target Images.