This version of this document is no longer maintained. For the latest documentation, see http://www.qnx.com/developers/docs.

Earlier in this manual, we described message passing in the context of a single node (see the section "QNX Neutrino IPC" in the microkernel chapter). But the true power of QNX Neutrino lies in its ability to take the message-passing paradigm and extend it transparently over a network of microkernels.

This chapter describes QNX Neutrino native networking (via the Qnet protocol). For information on TCP/IP networking, please refer to the next chapter.

At the heart of QNX Neutrino native networking is the Qnet protocol, which is meant to be deployed on a network of tightly coupled, trusted machines. Qnet lets these machines share their resources efficiently with little overhead. Using Qnet, you can use the standard OS utilities (cp, mv, and so on) to manipulate files anywhere on the Qnet network as if they were on your machine. Note that the Qnet protocol doesn't authenticate remote requests; files are protected only by the normal permissions that apply to users and groups. Besides files, you can also access and start or stop processes, including managers, that reside on any machine on the Qnet network.

The distributed processing power of Qnet lets you do the following tasks efficiently:

Moreover, since Qnet extends Neutrino message passing over the network, other forms of IPC (e.g. signals, message queues, named semaphores) also work over the network.

To understand how network-wide IPC works, consider two processes that wish to communicate with each other: a client process and a server process (in this case, the serial port manager process). In the single-node case, the client simply calls open(), read(), write(), etc. As we'll see shortly, a high-level POSIX call such as open() actually entails message-passing kernel calls "underneath" (ConnectAttach(), MsgSend(), etc.). But the client doesn't need to concern itself with those functions; it simply calls open().

fd = open("/dev/ser1",O_RDWR....); /*Open a serial device*/

Now consider the case of a simple network with two machines -- one contains the client process, the other contains the server process.

A simple network where the client and server reside on separate machines.

The code required for client-server communication is identical to the code in the single-node case, but with one important exception: the pathname. The pathname will contain a prefix that specifies the node that the service (/dev/ser1) resides on. As we'll see later, this prefix will be translated into a node descriptor for the lower-level ConnectAttach() kernel call that will take place. Each node in the network is assigned a node descriptor, which serves as the only visible means to determine whether the OS is running as a network or standalone.

For more information on node descriptors, see the Transparent Distributed Networking via Qnet chapter of the Programmer's Guide.

When you run Qnet, the pathname space of all the nodes in your Qnet network is added to yours. Recall that a pathname is a symbolic name that tells a program where to find a file within the directory hierarchy based at root (/).

The pathname space of remote nodes will appear under the prefix /net (the directory created by the Qnet protocol manager, npm-qnet.so, by default).

For example, remote node1 would appear as:

/net/node1/dev/socket

/net/node1/dev/ser1

/net/node1/home

/net/node1/bin

....

So with Qnet running, you can open pathnames (files or managers) on remote Qnet nodes just as you open files locally on your own node. This means you can access regular files or manager processes on other Qnet nodes as if they resided on your local node.

Recall our open() example above. If you wanted to open a serial device on node1 instead of on your local machine, you simply specify the path:

fd = open("/net/node1/dev/ser1",O_RDWR...); /*Open a serial device on node1*/
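Because only the pathname changes between the local and remote cases, the node can be treated as a parameter. The following is a minimal sketch, assuming the default /net prefix; the helper names `qnet_path()` and `open_on_node()` are hypothetical and aren't part of any QNX library.

```c
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Build "/net/<node><local_path>" into buf, assuming Qnet's default /net
 * prefix. local_path must be absolute (e.g. "/dev/ser1").
 * Returns 0 on success, -1 on an empty node, a relative path, or truncation. */
int qnet_path(char *buf, size_t size, const char *node, const char *local_path)
{
    assert(buf != NULL && node != NULL && local_path != NULL);
    if (strlen(node) == 0 || local_path[0] != '/')
        return -1;
    int n = snprintf(buf, size, "/net/%s%s", node, local_path);
    return (n < 0 || (size_t)n >= size) ? -1 : 0;
}

/* Open local_path on the given node; a NULL node means "this machine".
 * Returns a file descriptor, or -1 on error, just as open() does. */
int open_on_node(const char *node, const char *local_path, int oflag)
{
    char path[256];

    if (node == NULL)
        return open(local_path, oflag);
    if (qnet_path(path, sizeof path, node, local_path) != 0)
        return -1;
    return open(path, oflag);
}
```

With these helpers, `open_on_node("node1", "/dev/ser1", O_RDWR)` opens the same pathname as the call above, and `open_on_node(NULL, "/dev/ser1", O_RDWR)` opens the local device.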

For client-server communications, how does the client know what node descriptor to use for the server?

The client uses the filesystem's pathname space to "look up" the server's address. In the single-machine case, the result of that lookup will be a node descriptor, a process ID, and a channel ID. In the networked case, the results are the same -- the only difference will be the value of the node descriptor.

| If node descriptor is: | Then the server is: |
|---|---|
| 0 (or ND_LOCAL_NODE) | Local (i.e. "this node") |
| Nonzero | Remote |
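The lookup result and the local/remote test from the table can be sketched as follows. The `server_addr` structure and `server_is_remote()` are hypothetical names used only for illustration; on QNX Neutrino, ND_LOCAL_NODE is provided by `<sys/netmgr.h>`, and the fallback definition here (0, as the table states) exists only so that the sketch compiles elsewhere.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Fallback for non-QNX builds only; the value 0 is taken from the table. */
#ifndef ND_LOCAL_NODE
#define ND_LOCAL_NODE 0
#endif

/* Hypothetical container for the three values a pathname lookup yields. */
struct server_addr {
    uint32_t nd;    /* node descriptor */
    int32_t  pid;   /* process ID of the server */
    int32_t  chid;  /* channel ID the server receives on */
};

/* True if the server lives on another node, false if it's on this node. */
bool server_is_remote(const struct server_addr *addr)
{
    assert(addr != NULL);
    return addr->nd != ND_LOCAL_NODE;
}
```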

The practical result in both the local and networked case is that when the client connects to the server, the client gets a file descriptor (or connection ID in the case of kernel calls such as ConnectAttach()). This file descriptor is then used for all subsequent message-passing operations. Note that from the client's perspective, the file descriptor is identical for both the local and networked case.

Let's return to our open() example. Suppose a client on one node (lab1) wishes to use the serial port (/dev/ser1) on another node (lab2). The client will effectively perform an open() on the pathname /net/lab2/dev/ser1.

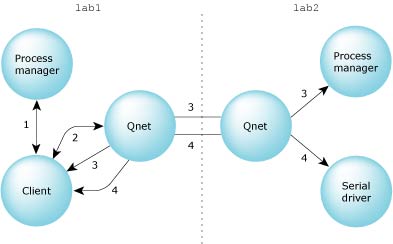

The following diagram shows the steps involved when the client open()'s /net/lab2/dev/ser1:

A client-server message pass across the network.

Here are the interactions:

1. The client sends a message to its local process manager, asking who should be contacted to resolve the pathname /net/lab2/dev/ser1. Since the native network manager (npm-qnet) has taken over the entire /net namespace, the process manager returns a redirect message, saying that the client should contact the local network manager for more information.

2. The client sends the same query to the local network manager. The local network manager replies with another redirect message, giving the node descriptor, process ID, and channel ID of the process manager on node lab2 -- effectively deferring the resolution of the request to node lab2.

3. The client connects to the process manager on node lab2 and repeats the query. The process manager on node lab2 returns another redirect, this time with the node descriptor, channel ID, and process ID of the serial driver on its own node.

4. The client connects to the serial driver on node lab2 and gets back a connection ID. After this point, from the client's perspective, message passing to the connection ID is identical to the local case. Note that all further message operations are now direct between the client and server.

The key thing to keep in mind here is that the client isn't aware of the operations taking place; these are all handled by the POSIX open() call. As far as the client is concerned, it performs an open() and gets back a file descriptor (or an error indication).

In each subsequent name-resolution step, the request from the client is stripped of already-resolved name components; this occurs automatically within the resource manager framework. This means that in step 2 above, the relevant part of the request is lab2/dev/ser1 from the perspective of the local network manager. In step 3, the relevant part of the request has been stripped to just dev/ser1, because that's all that lab2's process manager needs to know. Finally, in step 4, the relevant part of the request is simply ser1, because that's all the serial driver needs to know.
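The stripping of already-resolved components can be illustrated with a small string helper. This is only a sketch of the idea, not the resource manager framework's actual code; `strip_resolved()` is a hypothetical name.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* If path begins with the component(s) in prefix, return a pointer to the
 * unresolved remainder (without a leading '/'); otherwise return NULL.
 * Leading slashes on path are ignored; prefix must not start with '/'. */
const char *strip_resolved(const char *path, const char *prefix)
{
    assert(path != NULL && prefix != NULL);
    while (*path == '/')
        path++;

    size_t len = strlen(prefix);
    if (strncmp(path, prefix, len) != 0)
        return NULL;
    path += len;

    if (*path == '\0')
        return path;        /* fully resolved: nothing left */
    if (*path != '/')
        return NULL;        /* e.g. "lab22" must not match "lab2" */
    while (*path == '/')
        path++;
    return path;
}
```

Applied to /net/lab2/dev/ser1, stripping `net` leaves lab2/dev/ser1 (what the local network manager sees), stripping `lab2` from that leaves dev/ser1 (what lab2's process manager sees), and stripping `dev` leaves ser1 (what the serial driver sees).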

In the examples shown so far, remote services or files are located on known nodes or at known pathnames. For example, the serial port on lab1 is found at /net/lab1/dev/ser1.

The Global Name Service (GNS) allows you to locate services via an arbitrary name wherever the service is located, whether on the local system or on a remote node. For example, if you wanted to locate a modem on the network, you could simply look for the name "modem". This would cause the GNS server to locate the "modem" service, instead of using a static path such as /net/lab1/dev/ser1. The GNS server can be deployed such that it services all or a portion of your Qnet nodes, and you can have redundant GNS servers.

As mentioned earlier, the pathname prefix /net is the most common name that npm-qnet uses. In resolving names in a network-wide pathname space, the following terms come into play:

The following resolvers are built into the network manager:

Quality of Service (QoS) is an issue that often arises in high-availability networks as well as realtime control systems. In the Qnet context, QoS really boils down to transmission media selection -- in a system with two or more network interfaces, Qnet will choose which one to use according to the policy you specify.

If you have only a single network interface, the QoS policies don't apply at all.

Qnet supports transmission over multiple networks and provides the following policies for specifying how Qnet should select a network interface for transmission: loadbalance (the default), preferred, and exclusive.

To fully benefit from Qnet's QoS, you need to have physically separate networks. For example, consider a network with two nodes and a hub, where each node has two connections to the hub:

Qnet and a single network.

If the link that's currently in use fails, Qnet detects the failure, but doesn't switch to the other link because both links go to the same hub. It's up to the application to recover from the error; when the application reestablishes the connection, Qnet switches to the working link.

Now, consider the same network, but with two hubs:

Qnet and physically separate networks.

If the networks are physically separate and a link fails, Qnet automatically switches to another link, depending on the QoS that you chose. The application isn't aware that the first link failed.

Let's look in more detail at the QoS policies.

With the loadbalance policy, Qnet decides which links to use for sending packets, depending on current load and link speeds as determined by io-net. A packet is queued on the link that can deliver the packet the soonest to the remote end. This effectively provides greater bandwidth between nodes when the links are up (the bandwidth is the sum of the bandwidths of all available links), and allows a graceful degradation of service when links fail.

If a link does fail, Qnet will switch to the next available link. This switch takes a few seconds the first time, because the network driver on the bad link will have timed out, retried, and finally died. But once Qnet "knows" that a link is down, it will not send user data over that link. (This is a significant improvement over the QNX 4 implementation.)

While load-balancing among the live links, Qnet will send periodic maintenance packets on the failed link in order to detect recovery. When the link recovers, Qnet places it back into the pool of available links.

The loadbalance QoS policy is the default.

With the preferred policy, you specify a preferred link to use for transmissions. Qnet will use only that one link until it fails. If your preferred link fails, Qnet will then turn to the other available links and resume transmission, using the loadbalance policy.

Once your preferred link is available again, Qnet will again use only that link, ignoring all others (unless the preferred link fails).

You use the exclusive policy when you want to lock transmissions to only one link. Regardless of how many other links are available, Qnet will latch onto the one interface you specify. And if that exclusive link fails, Qnet will NOT use any other link.

Why would you want to use the exclusive policy? Suppose you have two networks, one much faster than the other, and you have an application that moves large amounts of data. You might want to restrict transmissions to only the fast network in order to avoid swamping the slow network under failure conditions.
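The three policies can be compared side by side in a small selection routine. This is a sketch under stated assumptions, not Qnet's implementation: the text says only that a packet goes to the link that can deliver it soonest, so the cost model here (queued bytes plus packet length, divided by link speed) is an assumption, and all type and function names are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

enum qos_policy { QOS_LOADBALANCE, QOS_PREFERRED, QOS_EXCLUSIVE };

struct net_link {
    bool          up;             /* false once the link is known to be down */
    unsigned long bytes_per_sec;  /* link speed */
    unsigned long queued_bytes;   /* data already waiting on this link */
};

/* loadbalance: pick the live link that would finish sending pkt_len soonest
 * (assumed cost model). Returns the link index, or -1 if no link is up. */
static int pick_soonest(const struct net_link *links, size_t n,
                        unsigned long pkt_len)
{
    int best = -1;
    double best_time = 0.0;

    for (size_t i = 0; i < n; i++) {
        if (!links[i].up || links[i].bytes_per_sec == 0)
            continue;
        double t = (double)(links[i].queued_bytes + pkt_len)
                 / (double)links[i].bytes_per_sec;
        if (best < 0 || t < best_time) {
            best = (int)i;
            best_time = t;
        }
    }
    return best;
}

/* Return the index of the link to use, or -1 if the policy allows none.
 * named is the link given with preferred/exclusive; unused for loadbalance. */
int select_link(enum qos_policy policy, size_t named,
                const struct net_link *links, size_t n, unsigned long pkt_len)
{
    assert(links != NULL);
    switch (policy) {
    case QOS_PREFERRED:
        if (named < n && links[named].up)
            return (int)named;
        return pick_soonest(links, n, pkt_len);  /* fall back to loadbalance */
    case QOS_EXCLUSIVE:
        return (named < n && links[named].up) ? (int)named : -1;
    case QOS_LOADBALANCE:
    default:
        return pick_soonest(links, n, pkt_len);
    }
}
```

The only difference between preferred and exclusive is what happens when the named link is down: preferred falls back to the remaining links, while exclusive reports that no link may be used.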

You specify the QoS policy as part of the pathname. For example, to access /net/lab2/dev/ser1 with a QoS of exclusive, you could use the following pathname:

/net/lab2~exclusive:en0/dev/ser1

The QoS parameter always begins with a tilde (~) character. Here we're telling Qnet to lock onto the en0 interface exclusively, even if it fails.
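To make the syntax concrete, here is a small parser for the node component of such a pathname. It's a sketch that assumes the form node[~policy[:interface]], inferred only from the examples in this chapter; Qnet's actual grammar may accept more than this, and the names `qos_spec` and `parse_qos_spec()` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

struct qos_spec {
    char node[64];    /* e.g. "lab2" */
    char policy[32];  /* e.g. "exclusive"; empty if no QoS was given */
    char iface[32];   /* e.g. "en0"; empty if no interface was given */
};

/* Copy len bytes of src into dst (capacity cap) and NUL-terminate.
 * Returns 0 on success, -1 if the piece doesn't fit. */
static int copy_piece(char *dst, size_t cap, const char *src, size_t len)
{
    if (len >= cap)
        return -1;
    memcpy(dst, src, len);
    dst[len] = '\0';
    return 0;
}

/* Split a node component of the assumed form node[~policy[:iface]].
 * Returns 0 on success, -1 on an empty node name or an oversized piece. */
int parse_qos_spec(const char *component, struct qos_spec *out)
{
    assert(component != NULL && out != NULL);
    memset(out, 0, sizeof *out);

    const char *tilde = strchr(component, '~');
    size_t node_len = tilde ? (size_t)(tilde - component) : strlen(component);
    if (node_len == 0 ||
        copy_piece(out->node, sizeof out->node, component, node_len) != 0)
        return -1;
    if (tilde == NULL)
        return 0;                   /* no QoS suffix */

    const char *policy = tilde + 1;
    const char *colon = strchr(policy, ':');
    size_t policy_len = colon ? (size_t)(colon - policy) : strlen(policy);
    if (copy_piece(out->policy, sizeof out->policy, policy, policy_len) != 0)
        return -1;
    if (colon == NULL)
        return 0;                   /* policy without an interface */

    return copy_piece(out->iface, sizeof out->iface,
                      colon + 1, strlen(colon + 1));
}
```

Parsing `lab2~exclusive:en0` yields the node lab2, the policy exclusive, and the interface en0; parsing plain `lab2` yields the node with an empty policy and interface.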

You can set up symbolic links to the various "QoS-qualified" pathnames:

ln -sP /net/lab2~preferred:en1 /remote/sql_server

This assigns an "abstracted" name of /remote/sql_server to the node lab2 with a preferred QoS (i.e. over the en1 link).

You can't create symbolic links inside /net because Qnet takes over that namespace.

Abstracting the pathnames by one level of indirection lets you have multiple servers in a network, all providing the same service. When one server fails, the abstract pathname can be "remapped" to point to the pathname of a different server. For example, if lab2 failed, then a monitoring program could detect this and effectively issue:

rm /remote/sql_server

ln -sP /net/lab1 /remote/sql_server

This would remove lab2 and reassign the service to lab1. The real advantage here is that applications can be coded based on the abstract "service name" rather than be bound to a specific node name.
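A monitoring program could perform the same remapping from C. The sketch below uses the POSIX unlink() and symlink() calls, which create an ordinary filesystem link; it doesn't attempt to reproduce the -P option used in the commands above, and `remap_service()` is a hypothetical helper name.

```c
#include <assert.h>
#include <errno.h>
#include <stddef.h>
#include <unistd.h>

/* Point link_path at new_target, replacing any existing link.
 * Mirrors "rm link; ln -s target link". Returns 0 on success, or -1 on
 * error with errno set by unlink() or symlink(). */
int remap_service(const char *link_path, const char *new_target)
{
    assert(link_path != NULL && new_target != NULL);
    if (unlink(link_path) != 0 && errno != ENOENT)
        return -1;      /* a missing link is fine; any other error isn't */
    return symlink(new_target, link_path);
}
```

For example, `remap_service("/remote/sql_server", "/net/lab1")` corresponds to the two commands above. As with the shell version, there's a brief window between the removal and the re-creation during which the abstract name doesn't exist.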

Let's look at a few examples of how you'd use the network manager.

The QNX Neutrino native network manager npm-qnet is actually a shared object that installs into the executable io-net.

If you're using QNX Neutrino on a small LAN, you can use just the default ndp resolver. When a node name that's currently unknown is being resolved, the resolver will broadcast the name request over the LAN, and the node that has the name will respond with an identification message. Once the name's been resolved, it's cached for future reference.

Since ndp is the default resolver when you start npm-qnet.so, you can simply issue commands like:

ls /net/lab2/

If you have a machine called "lab2" on your LAN, you'll see the contents of its root directory.

For security reasons, you should have a firewall set up on your network before connecting to the Internet. For more information, see the section "Setting up a firewall" in the chapter Securing Your System in the User's Guide.

Qnet can also use DNS (Domain Name System) when resolving remote names. To use npm-qnet.so with DNS, you specify this resolver on mount's command line:

mount -Tio-net -o"mount=:,resolve=dns,mount=.com:.net:.edu" /lib/dll/npm-qnet.so

In this example, Qnet will use both its native ndp resolver (indicated by the first mount= option) and DNS for resolving remote names.

Note that we've specified several types of domain names (mount=.com:.net:.edu) as mountpoints, simply to ensure better remote name resolution.

Now you could enter a command such as:

ls /net/qnet.qnx.com/repository

and you'd get a listing of the repository directory at the qnet.qnx.com site.

In most cases, you can use standard QNX drivers to implement Qnet over a local area network or to encapsulate Qnet messages in IP (TCP/IP) to allow Qnet to be routed to remote networks. But suppose you want to set up a very tightly coupled network between two CPUs over a super-fast interconnect (e.g. PCI or RapidIO)?

You can easily take advantage of the performance of such a high-speed link, because Qnet can talk directly to your hardware driver. There's no io-net layer in this case. All you need is a little code at the very bottom of the Qnet layer that understands how to transmit and receive packets. This is simple, thanks to a standard internal API between the rest of Qnet and this very bottom portion, the driver interface.

Qnet already supports different packet transmit/receive interfaces, so adding another is reasonably straightforward. Qnet's transport mechanism (called "L4") is quite generic, and can be configured for different size MTUs, for whether or not ACK packets or CRC checks are required, etc., to take full advantage of your link's advanced features (e.g. guaranteed reliability).

A Qnet software development kit is available to help you write custom drivers and/or modify Qnet components to suit your particular application.
