VIRTIO is a convenient and popular standard for implementing vdevs; using VIRTIO vdevs can reduce virtualization overhead.

QNX hypervisors currently support the VIRTIO 1.1 specification. The benefits of VIRTIO include:

- Implementation – no changes are required to Linux or Android kernels; you can use the default VIRTIO 1.1 kernel modules.

- Efficiency – VIRTIO uses virtqueues that efficiently collect data for transport to and from the shared device.

- Serialized queues – the underlying hardware can handle requests in a serialized fashion, managing the priorities of requests from multiple guests (i.e., priority-based sharing).

- Guest performance – even though the queues to the shared hardware are serialized, the guest can create multiple queue requests without blocking; the guest is blocked only when it explicitly needs to wait for completion (e.g., a call to flush data).

For more information about VIRTIO, including a link to the latest specification, see OASIS Virtual I/O Device (VIRTIO) TC on the Organization for the Advancement of Structured Information Standards (OASIS) web site; and Edouard Bugnion, Jason Nieh and Dan Tsafrir, Hardware and Software Support for Virtualization (Morgan & Claypool, 2017), pp. 96-100.

Implementing a VIRTIO vdev

For this discussion, we will examine an implementation of VIRTIO using virtio-blk (block device support for filesystems). Note that:

- QNX hypervisors support virtio-blk interfaces that are compatible with VIRTIO 1.1; so long as the guest supports this standard, this implementation requires no changes to the guest.

- The VIRTIO 1.1 specification stipulates that the guest creates the virtqueues, and that they exist in the guest's address space. Thus, the guest controls the virtqueues.
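The sketch below shows what guest-owned virtqueues look like in memory. It uses the split-virtqueue layout from the VIRTIO 1.1 specification (a descriptor table, an available ring, and a used ring) and shows how a guest driver might publish a request without waiting for the device. The struct and flag names follow the specification. `QUEUE_SIZE`, `struct virtq`, `free_head`, and `virtq_post()` are hypothetical. The simple allocator ignores descriptor reuse, and the code assumes a little-endian CPU (the specification's fields are little-endian). This is an illustration, not the Linux virtio_ring code.

```c
#include <assert.h>
#include <stdint.h>

/* Descriptor flags from the VIRTIO 1.1 split-virtqueue definition. */
#define VIRTQ_DESC_F_NEXT  1  /* chain continues via the 'next' field */
#define VIRTQ_DESC_F_WRITE 2  /* buffer is device-writable (else device-readable) */

struct virtq_desc {
    uint64_t addr;   /* guest-physical address of the buffer */
    uint32_t len;    /* buffer length in bytes */
    uint16_t flags;  /* VIRTQ_DESC_F_* */
    uint16_t next;   /* index of the next descriptor in the chain */
};

#define QUEUE_SIZE 8  /* hypothetical; queue sizes are powers of 2 */

/* Optional event-index fields (used_event/avail_event) are omitted here. */
struct virtq_avail {
    uint16_t flags;
    uint16_t idx;               /* free-running count of published chains */
    uint16_t ring[QUEUE_SIZE];  /* head descriptor index of each chain */
};

struct virtq_used_elem {
    uint32_t id;   /* head index of a completed chain */
    uint32_t len;  /* bytes the device wrote into that chain */
};

struct virtq_used {
    uint16_t flags;
    uint16_t idx;
    struct virtq_used_elem ring[QUEUE_SIZE];
};

/* Hypothetical driver-side view of one virtqueue, all in guest memory. */
struct virtq {
    struct virtq_desc desc[QUEUE_SIZE];
    struct virtq_avail avail;
    struct virtq_used used;
    uint16_t free_head;  /* next unused descriptor (naive allocator) */
};

/* Chain n buffers and publish the chain on the available ring.
 * Returns the head index; nothing here waits for the device. */
static uint16_t virtq_post(struct virtq *vq, const uint64_t *addrs,
                           const uint32_t *lens, const int *device_writable, int n)
{
    assert(n > 0 && n <= QUEUE_SIZE);
    uint16_t head = vq->free_head;
    for (int i = 0; i < n; i++) {
        uint16_t d = (uint16_t)((head + i) % QUEUE_SIZE);
        vq->desc[d].addr = addrs[i];
        vq->desc[d].len = lens[i];
        vq->desc[d].flags = device_writable[i] ? VIRTQ_DESC_F_WRITE : 0;
        vq->desc[d].next = 0;
        if (i + 1 < n) {
            vq->desc[d].flags |= VIRTQ_DESC_F_NEXT;
            vq->desc[d].next = (uint16_t)((d + 1) % QUEUE_SIZE);
        }
    }
    vq->free_head = (uint16_t)((head + n) % QUEUE_SIZE);
    vq->avail.ring[vq->avail.idx % QUEUE_SIZE] = head;
    /* A real driver issues a write memory barrier here so the device
     * sees the ring entry before the updated index. */
    vq->avail.idx++;
    return head;
}
```

A virtio-blk request is typically a three-descriptor chain: a device-readable header, the data buffers (device-writable for a read), and a one-byte device-writable status. The driver blocks only later, and only if it chooses to wait for that chain's head index to appear in the used ring.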

The hypervisor uses two mechanisms specific to its implementation of the virtio-blk device:

- A file descriptor (fd) – this refers to the device being mapped by virtio-blk. The qvm process instance for the VM hosting the guest creates one fd per virtio-blk interface. Each fd connects the qvm process instance to the QNX io-blk driver in the hypervisor host.

- I/O vector (iov) data transport – the vdev uses iov structures to move data between the virtqueues and the hardware driver.
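The iov transport maps naturally onto the POSIX `struct iovec` type. Once the qvm process can reach the data buffers named by a request's descriptors, a single vectored call on the hostdev fd can move all of them. The sketch below is a minimal illustration of that idea, not the vdev's actual code. `struct seg`, `MAX_SEGS`, `open_hostdev()`, and `blk_transfer()` are hypothetical. `preadv()` and `pwritev()` are Linux/BSD extensions; on other systems, `lseek()` followed by `readv()` or `writev()` is a rough single-threaded equivalent.

```c
#define _DEFAULT_SOURCE  /* expose preadv()/pwritev() on glibc and musl */
#include <assert.h>
#include <fcntl.h>
#include <stddef.h>
#include <sys/types.h>
#include <sys/uio.h>
#include <unistd.h>

#define MAX_SEGS 16  /* hypothetical limit on data segments per request */

/* Hypothetical: one data segment, already translated from a guest-physical
 * address to a pointer in the qvm process's address space. */
struct seg {
    void *ptr;
    size_t len;
};

/* Hypothetical helper: open a hostdev path, one fd per virtio-blk interface. */
static int open_hostdev(const char *path)
{
    return open(path, O_RDWR);
}

/* Describe the request's data segments with an iovec array and transfer
 * them in one vectored call at the given byte offset on the device. */
static ssize_t blk_transfer(int fd, const struct seg *segs, int nsegs,
                            off_t offset, int is_write)
{
    struct iovec iov[MAX_SEGS];
    assert(nsegs > 0 && nsegs <= MAX_SEGS);
    for (int i = 0; i < nsegs; i++) {
        iov[i].iov_base = segs[i].ptr;
        iov[i].iov_len = segs[i].len;
    }
    return is_write ? pwritev(fd, iov, nsegs, offset)
                    : preadv(fd, iov, nsegs, offset);
}
```

The gather list removes the need to coalesce segments: the kernel walks the iovec array in order, so two segments of 4 and 3 bytes are written as 7 contiguous bytes on the device.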

For more information about what to do in a guest when implementing VIRTIO, see “Discovering and connecting VIRTIO devices” in the “Using Virtual Devices, Networking, and Memory Sharing” chapter.

Guest-to-hardware communication with virtqueues

Assuming a Linux guest, the virtio-blk implementation in a QNX hypervisor system works as follows:

1. A VM is configured with a virtio-blk vdev.

   This vdev includes a delayed option that can be used to reduce cold-boot times. It lets the VM launch and the guest start without confirming the presence of the hardware device. For example, the guest can start even if the underlying block device specified by the virtio-blk hostdev option isn't yet available (see vdev virtio-blk in the “Virtual Device Reference” chapter).

2. The qvm process instance hosting the guest reads its configuration file and loads vdev-virtio-blk.so.

   This vdev's hostdev (host device) option specifies the mountpoint or file path that will be opened and passed to the guest as /dev/vda. For example, the hostdev option can pass the path /dev/hd1t131 to the guest as /dev/vda. You can specify multiple hostdev options for a guest.

3. The qvm process hosting the guest launches, and the guest starts.

4. After the guest opens the locations specified by the vdev's hostdev options, file descriptors refer to each of these locations. The qvm process instance owns these file descriptors, but the QNX hypervisor microkernel manages them.

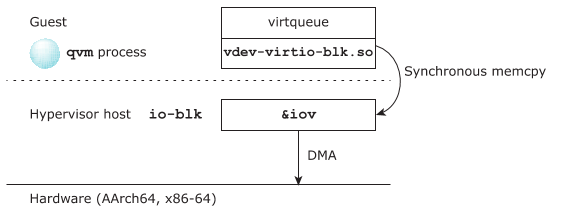

Figure 1. Data is passed from virtqueues in the guest to the hardware. The guest and the qvm process share the same address space.

The guest can now read from and write to the hardware. For example, on a Linux application read:

- The io-blk driver pulls blocks into iov data structures, either from the disk cache or from the hardware via DMA.

- The data in the iov structures is copied into the virtqueues using synchronous memcpy() calls, as shown in the figure above.

- The Linux virtio_blk kernel module provides virtqueue data to the application.
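The memcpy() step in the read path must handle segment boundaries that differ between the host's iov buffers and the guest's descriptor buffers. The sketch below copies between two scatter/gather lists and then sets the request's status byte, using the status values from the virtio-blk section of the VIRTIO 1.1 specification. `sg_copy()` and `complete_read()` are hypothetical names. The code illustrates the technique and is not the qvm implementation.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>
#include <sys/uio.h>

/* Status values from the VIRTIO 1.1 virtio-blk section. */
#define VIRTIO_BLK_S_OK    0
#define VIRTIO_BLK_S_IOERR 1

/* Copy bytes from one scatter/gather list to another whose segment
 * boundaries may differ. Returns the bytes copied (the smaller total). */
static size_t sg_copy(const struct iovec *src, int nsrc,
                      const struct iovec *dst, int ndst)
{
    assert(nsrc >= 0 && ndst >= 0);
    size_t copied = 0, soff = 0, doff = 0;
    int si = 0, di = 0;
    while (si < nsrc && di < ndst) {
        size_t s_left = src[si].iov_len - soff;
        size_t d_left = dst[di].iov_len - doff;
        size_t n = s_left < d_left ? s_left : d_left;
        if (n > 0)
            memcpy((char *)dst[di].iov_base + doff,
                   (const char *)src[si].iov_base + soff, n);
        copied += n;
        soff += n;
        doff += n;
        if (soff == src[si].iov_len) { si++; soff = 0; }  /* next source segment */
        if (doff == dst[di].iov_len) { di++; doff = 0; }  /* next guest buffer */
    }
    return copied;
}

/* Hypothetical read completion: copy io-blk's data from host iov buffers
 * into the guest's device-writable buffers, then set the status byte
 * that the guest driver checks when the request completes. */
static size_t complete_read(const struct iovec *host, int nhost,
                            const struct iovec *guest, int nguest,
                            size_t expected, uint8_t *status)
{
    size_t n = sg_copy(host, nhost, guest, nguest);
    *status = (n == expected) ? VIRTIO_BLK_S_OK : VIRTIO_BLK_S_IOERR;
    return n;
}
```

For example, 11 bytes held in host segments of 6 bytes ("hello ") and 5 bytes ("world") land in guest buffers of 4 and 8 bytes as "hell" and "o world", and the status byte is set to VIRTIO_BLK_S_OK.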

Similarly, on a Linux application write:

- The Linux virtio_blk kernel module fills virtqueues.

- On a flush or kick operation, the qvm process instance uses synchronous memcpy() to copy the virtqueue data directly to the iov data structures in the multi-threaded io-blk driver.

- The io-blk driver writes to disk (DMA of iov directly to disk).

For both reading and writing, the Linux filesystem cache may be used.
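The write path can be sketched as a small request handler. The header layout, request types, status values, and 512-byte sector unit come from the virtio-blk section of the VIRTIO 1.1 specification. The handler itself, `handle_blk_request()`, is hypothetical. For writes, it gathers the guest's buffers into a bounce buffer with memcpy() before calling `pwrite()`. Reads mirror this in reverse, and a flush request maps to `fsync()`. The sketch assumes a little-endian host and ignores multi-threading and retries after partial transfers, which a real io-blk client would handle.

```c
#define _DEFAULT_SOURCE  /* expose pread()/pwrite()/fsync()/mkstemp() on glibc */
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>
#include <sys/types.h>
#include <sys/uio.h>
#include <unistd.h>

/* Values from the VIRTIO 1.1 virtio-blk section. */
#define VIRTIO_BLK_T_IN        0
#define VIRTIO_BLK_T_OUT       1
#define VIRTIO_BLK_T_FLUSH     4
#define VIRTIO_BLK_S_OK        0
#define VIRTIO_BLK_S_IOERR     1
#define VIRTIO_BLK_S_UNSUPP    2
#define VIRTIO_BLK_SECTOR_SIZE 512  /* request sectors always count 512-byte units */

/* Device-readable header at the start of every request. */
struct virtio_blk_req_hdr {
    uint32_t type;
    uint32_t reserved;
    uint64_t sector;
};

/* Hypothetical handler. 'data' lists the guest's data buffers, already
 * mapped into this process. Returns the status byte for the request. */
static uint8_t handle_blk_request(int fd, const struct virtio_blk_req_hdr *hdr,
                                  const struct iovec *data, int ndata)
{
    if (hdr->type == VIRTIO_BLK_T_FLUSH)
        return fsync(fd) == 0 ? VIRTIO_BLK_S_OK : VIRTIO_BLK_S_IOERR;
    if (hdr->type != VIRTIO_BLK_T_IN && hdr->type != VIRTIO_BLK_T_OUT)
        return VIRTIO_BLK_S_UNSUPP;

    assert(ndata >= 0);
    size_t total = 0;
    for (int i = 0; i < ndata; i++)
        total += data[i].iov_len;
    char *bounce = malloc(total ? total : 1);
    if (bounce == NULL)
        return VIRTIO_BLK_S_IOERR;

    off_t offset = (off_t)(hdr->sector * VIRTIO_BLK_SECTOR_SIZE);
    size_t pos = 0;
    ssize_t n;
    if (hdr->type == VIRTIO_BLK_T_OUT) {
        for (int i = 0; i < ndata; i++) {  /* gather: guest buffers -> bounce */
            memcpy(bounce + pos, data[i].iov_base, data[i].iov_len);
            pos += data[i].iov_len;
        }
        n = pwrite(fd, bounce, total, offset);
    } else {
        n = pread(fd, bounce, total, offset);
        if (n >= 0 && (size_t)n == total) {
            for (int i = 0; i < ndata; i++) {  /* scatter: bounce -> guest buffers */
                memcpy(data[i].iov_base, bounce + pos, data[i].iov_len);
                pos += data[i].iov_len;
            }
        }
    }
    free(bounce);
    return (n >= 0 && (size_t)n == total) ? VIRTIO_BLK_S_OK : VIRTIO_BLK_S_IOERR;
}
```

A flush request lets a guest push data out of host-side caches. Mapping it to fsync() on the hostdev fd is one reasonable choice for this sketch, but it is not necessarily what the QNX vdev does.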