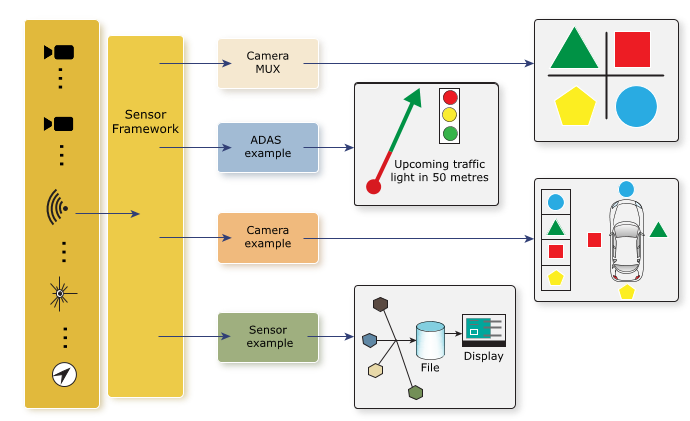

The Sensor Framework for QNX SDP lets you create many types of applications for your embedded system.

Applications can use the Camera library to capture the video stream from a camera and then use vision algorithms either to stitch the images together into a surround view of the physical environment or to identify objects in the video, such as traffic lights, other vehicles, or pedestrians.

Using the Sensor library, applications can gather data from multiple sensors. Using the ADAS library, they can visualize sensor data in a camera viewer or point cloud viewer; overlay text, rectangles, or images on the data; or apply vision algorithms to the data and overlay track information on the viewers.

Figure 1. Sample applications that use the Sensor Framework

For more information, see the Camera Developer's Guide, Sensor Developer's Guide, ADAS Library Developer's Guide, and the Sensor Framework Services Guide.

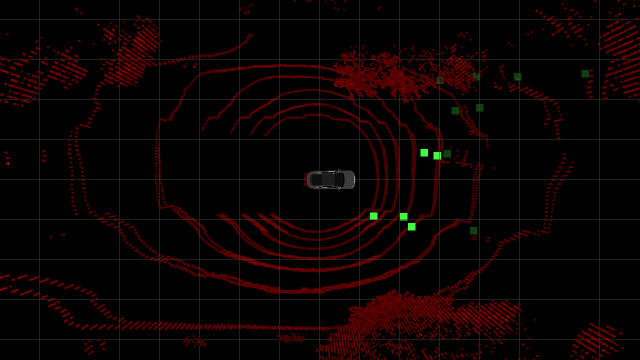

For example, you can build an application that runs at startup to provide feeds from sensors and cameras connected to the system. Most of the provided reference images include the ADAS example application, which starts immediately at boot and shows imagery from various sensors that represents the vehicle and its surroundings. The following example shows a camera feed and a data visualization with overlays (boxes) that highlight traffic lights and represent the vehicle's surroundings:

Figure 2. Front view running on ADAS example application

Figure 3. Point cloud showing multiple sensors for a bird's eye view

In addition to the ADAS example application, other sample applications are available on the reference images, depending on the hardware platform, such as:

- Sensor example, which demonstrates how to use the Sensor library

- Camera example, which demonstrates how to use the Camera library

- Camera MUX, which shows a grid (e.g., 2x2 or 3x3) of different views

For more information about these sample applications, see the “Run Sample Applications” chapter in the Getting Started guide for the hardware platform you want to use.