The smmuman service is required to support pass-through DMA devices in a QNX Hypervisor system.

In a hypervisor system, the smmuman service is required not only to program the DMA device memory regions and permissions into the board IOMMU/SMMUs, but also to manage guest-physical to host-physical memory translations and access for DMA devices passed through to a guest running in a hypervisor VM (see “SMMUMAN in a QNX Hypervisor guest” in the “Architecture” chapter).

Each VM (qvm process instance) in the hypervisor host configures the smmuman service to program the board IOMMU/SMMUs, which limits the host-physical memory that DMA devices passed through to its guest can access. Any DMA device passed through to a guest is limited to the host-physical memory region(s) assigned to that VM in its configuration (see the QNX Hypervisor User's Guide).

This configuration protects the hypervisor host and guests running in other hypervisor VMs from errant DMA devices in the guest, but it doesn't protect the guest from its own DMA devices (DMA devices passed through to that guest).
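As a rough model of this host-level protection, the following C sketch treats a VM's configuration as a list of host-physical regions and checks each DMA access against it. The type and function names (`hv_region`, `vm_dma_allowed`) are hypothetical and are not part of the SMMUMAN API; the sketch only illustrates why an access to another VM's memory is blocked while an access anywhere inside the guest's own memory is not.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical model of one host-physical memory region assigned to a VM.
 * The range is half-open: [start, end). */
typedef struct {
    uint64_t start;
    uint64_t end;
} hv_region;

/* Returns true if a DMA access of `len` bytes at host-physical address
 * `addr` lies entirely inside one of the VM's assigned regions. This is
 * the only check that the VM's coarse, host-level programming provides. */
bool vm_dma_allowed(const hv_region *regions, size_t nregions,
                    uint64_t addr, uint64_t len)
{
    if (len == 0 || addr + len < addr) {
        return false; /* empty access, or the range wraps around */
    }
    for (size_t i = 0; i < nregions; i++) {
        if (addr >= regions[i].start && addr + len <= regions[i].end) {
            return true;
        }
    }
    return false;
}
```

With a single region of 0x20000000 to 0xB0000000, an access at 0x40000000 passes even if no driver in the guest asked for that memory; closing that gap is the job of the guest's own smmuman service.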

The guest must configure its own smmuman service with the memory regions and permissions it requires for each pass-through DMA device it controls. The smmuman service running in the guest uses the smmu virtual device (vdev-smmu.so) to pass on these memory regions and permissions to the smmuman service running in the host, which in turn programs the board IOMMU/SMMUs.
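The layering can be modeled as a request that the guest's smmuman service hands to the smmu virtual device, which forwards it to the host's smmuman service. In this hypothetical C sketch, none of the names come from the SMMUMAN API or vdev-smmu.so, and guest-physical and host-physical addresses are assumed to be identical for simplicity. The host side accepts a mapping only if it lies inside the range and permissions assigned to the VM, so a guest can narrow a DMA device's access but never widen it.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical permission bits for a DMA mapping. */
enum { PERM_READ = 1u << 0, PERM_WRITE = 1u << 1 };

/* Hypothetical mapping: half-open range [start, end) plus permissions. */
typedef struct {
    uint64_t start;
    uint64_t end;
    unsigned perms;
} dma_mapping;

/* Host side: accept a request only if its range and permissions fall
 * inside what was programmed for the whole VM. */
static bool model_host_program(const dma_mapping *vm_region,
                               const dma_mapping *req)
{
    return req->start < req->end
        && req->start >= vm_region->start
        && req->end <= vm_region->end
        && (req->perms & ~vm_region->perms) == 0;
}

/* Virtual device: forwards the guest's request to the host unchanged
 * (address translation is omitted under the identity-mapping assumption). */
static bool model_vdev_forward(const dma_mapping *vm_region,
                               const dma_mapping *req)
{
    return model_host_program(vm_region, req);
}

/* Guest side: programs the virtual device as it would a hardware
 * IOMMU/SMMU and reports whether the mapping was accepted. */
bool model_guest_program(const dma_mapping *vm_region,
                         const dma_mapping *req)
{
    return model_vdev_forward(vm_region, req);
}
```

Because the host side re-checks every forwarded request, a misbehaving guest smmuman service can at worst restrict its own DMA devices; it can't grant them access outside the VM's memory.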

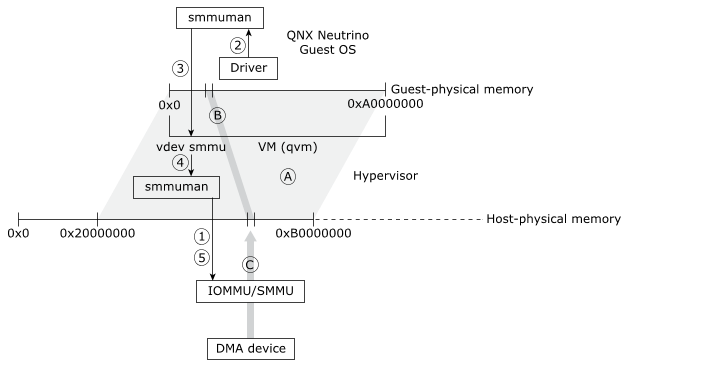

The figure below illustrates how the smmuman service is implemented in a QNX Hypervisor guest to constrain a pass-through DMA device to its assigned memory region:

Figure 1. The smmuman service running in a guest uses the

smmuman service running in the hypervisor.

Figure 1. The smmuman service running in a guest uses the

smmuman service running in the hypervisor.For simplicity, the diagram shows a single guest with a single pass-through DMA device:

- In the hypervisor host, the VM (qvm process instance) that will host the guest uses the smmuman service to program the IOMMU/SMMU with the memory range and permissions (A) for the entire physical memory region(s) that it will present to its guest (e.g., 0x20000000 to 0xB0000000).

  This protects the hypervisor host and other guests if DMA devices passed through to the guest erroneously or maliciously attempt to access their memory. However, it doesn't prevent these DMA devices from improperly accessing memory assigned to the guest itself.

- In the guest, the driver for the pass-through DMA device uses the SMMUMAN client-side API to program the memory allocation and permissions (B) it needs into the smmuman service running in the guest.

- The guest's smmuman service programs the DMA device's memory allocation and permissions (B) into the smmu virtual device in its hosting VM, just as it would program a hardware IOMMU/SMMU.

- The smmu virtual device uses the client-side API of the smmuman service running in the hypervisor host to ask that service to program the memory allocation and permissions (B) requested by the guest's smmuman service into the board IOMMU/SMMU(s).

- The host's smmuman service programs the pass-through DMA device's memory allocation and permissions (B) into the board IOMMU/SMMU.

The DMA device's access to memory (C) is now limited to the region and permissions requested by the DMA device driver in the guest (B), and the guest OS and other components are protected from this device erroneously or maliciously accessing their memory.
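The final decision for each DMA access (C) can be modeled as a lookup in the entries programmed for the device. This hypothetical C sketch (`iommu_entry` and `model_iommu_check` are illustrative names, not SMMUMAN or hardware interfaces) allows an access only if it fits entirely inside an entry that grants the requested access type; everything else is blocked.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical access types, also used as permission bits. */
enum { ACC_READ = 1u << 0, ACC_WRITE = 1u << 1 };

/* Hypothetical entry programmed into the board IOMMU/SMMU for one device:
 * half-open range [start, end) and the access types it permits. */
typedef struct {
    uint64_t start;
    uint64_t end;
    unsigned perms;
} iommu_entry;

/* Returns true if a DMA access of `len` bytes at `addr` with type `access`
 * fits inside one programmed entry that grants that access type. */
bool model_iommu_check(const iommu_entry *table, size_t n,
                       uint64_t addr, uint64_t len, unsigned access)
{
    if (len == 0 || addr + len < addr) {
        return false; /* empty access, or the range wraps around */
    }
    for (size_t i = 0; i < n; i++) {
        if (addr >= table[i].start && addr + len <= table[i].end
            && (table[i].perms & access) == access) {
            return true;
        }
    }
    return false; /* no matching entry: the access is blocked */
}
```

If the driver requested a read-only mapping (B) of 0x40000000 to 0x40100000, a read inside that range passes, while a write to the same range, or a read at 0x50000000, is blocked, even though 0x50000000 lies inside the VM's region (A).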

You can't use startup mappings in the configuration for the smmuman service running in the hypervisor host to map DMA devices that are passed through to a guest in a hypervisor VM. You can use startup mappings in the configuration for the smmuman service running in a guest, however.