Composition is the process of combining multiple content sources into a single image.

Screen uses a composition strategy that's optimal for the current scene, taking memory bandwidth and the available pipelines into consideration. When the device driver supports multiple pipelines and buffers, Screen takes advantage of these hardware capabilities to use each pipeline and to combine the pipelines at display time. For applications that require complex graphical operations, you can also use hardware-accelerated options such as OpenGL ES or bit-blitting hardware. Screen resorts to using the CPU for composition only when your platform doesn't support any hardware-accelerated option.

The following forms of transparency are used for composition:

- Destination view port: Allows any content on the layers below to be displayed. This mode is implicitly transparent: anything outside the specified view port is transparent.

- Source chroma: Allows source pixels of a particular color to be interpreted as transparent. Unlike a destination view port, source chroma allows transparent pixels within the buffer.

- Source alpha blending: Blends pixels based on the alpha channel of the source pixel. Source alpha blending is one of the most powerful forms of transparency because it can blend across the full range from fully opaque to fully transparent.
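To make the three transparency forms concrete, the following C sketch models what each one does to a single pixel. It is an illustrative software model only, not how Screen or the display hardware implements these modes. The 32-bit ARGB pixel layout (0xAARRGGBB), the `rect_t` type, and the function names are assumptions made for this example.

```c
#include <stdbool.h>
#include <stdint.h>

/* Illustrative model only: not Screen's implementation.
 * Pixels are assumed to be 32-bit ARGB laid out as 0xAARRGGBB. */
typedef uint32_t pixel_t;

/* Hypothetical destination view port, in display coordinates. */
typedef struct {
    int x, y, w, h;
} rect_t;

/* Destination view port: a pixel outside the view port is implicitly
 * transparent, so whatever is on the layers below shows through. */
static bool in_view_port(rect_t vp, int x, int y)
{
    return x >= vp.x && x < vp.x + vp.w &&
           y >= vp.y && y < vp.y + vp.h;
}

/* Source chroma: a source pixel whose color matches the key is treated
 * as transparent, so the destination pixel is kept. Only the RGB bits
 * are compared; the alpha byte is ignored in this model. */
static pixel_t chroma_over(pixel_t src, pixel_t dst, pixel_t key)
{
    const pixel_t rgb = 0x00FFFFFFu;
    return ((src & rgb) == (key & rgb)) ? dst : src;
}

/* Source alpha blending (source-over, non-premultiplied alpha):
 * out = src * a + dst * (1 - a) for each color channel, where
 * a = source alpha / 255. The result is marked fully opaque, which
 * assumes an opaque destination. */
static pixel_t alpha_over(pixel_t src, pixel_t dst)
{
    uint32_t a = src >> 24;
    pixel_t out = 0xFF000000u;

    for (int shift = 0; shift < 24; shift += 8) {
        uint32_t s = (src >> shift) & 0xFFu;
        uint32_t d = (dst >> shift) & 0xFFu;
        /* +127 rounds to the nearest integer instead of truncating. */
        uint32_t c = (s * a + d * (255u - a) + 127u) / 255u;
        out |= c << shift;
    }
    return out;
}
```

With a source alpha of 0xFF, `alpha_over()` returns the source color; with 0x00, it returns the destination color; and `alpha_over(0x80FF0000, 0xFF000000)` blends half-intensity red over black to give `0xFF800000`. This intermediate range is what chroma keying cannot express, since a chroma key is all-or-nothing per pixel.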

Screen supports two types of composition:

- Hardware composition: Composes all visible (enabled) pipelines of the display controller and then displays them.

- Screen composition: Combines multiple elements into a single buffer that is associated with a pipeline and then displayed. This composition is handled by the Composition Manager of Screen.

Hardware composition

Hardware composition capabilities are constrained by the display controller. Therefore, they vary from platform to platform.

All visible (enabled) pipelines are composed and displayed. At any given time, each layer that is being displayed has only one buffer associated with it.

That buffer belongs to a window that can be displayed directly on a pipeline. Such a window is considered autonomous because the Composition Manager performs no composition on its buffer. For a window to be displayed autonomously on a pipeline, the format of the window's buffer must be supported by the associated pipeline.

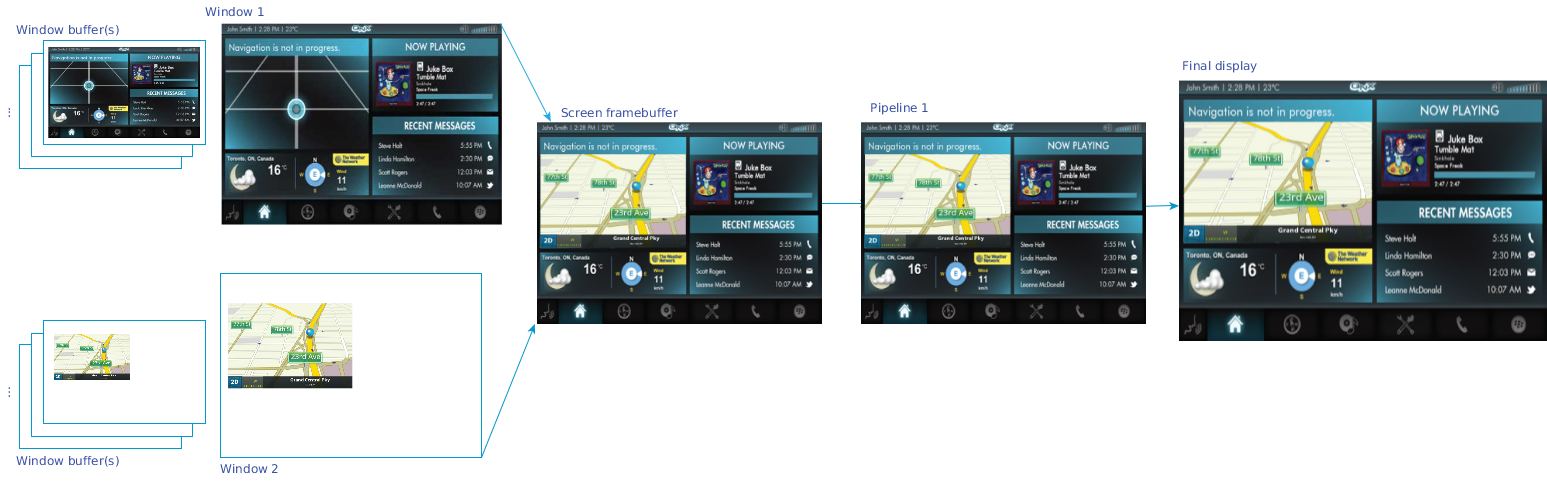

Figure 1. An example of hardware composition with two windows and two supported pipelines

This hardware composition example shows two windows. Each window posts a different buffer and binds to a different pipeline. The output from both pipelines is combined and fed into the associated display port and then onto the display hardware.

To use hardware composition, you must:

- Have the correct Screen configuration. Determine which pipelines are available on your display controller and choose the pipeline on which you want to display a window. The supported pipelines on your wfd device are configured in your graphics.conf file.

- Use screen_set_window_property_iv() to set SCREEN_PROPERTY_PIPELINE to one of the supported pipelines that you configured in graphics.conf.

- Use screen_set_window_property_iv() to set the SCREEN_USAGE_OVERLAY bit in your window's SCREEN_PROPERTY_USAGE property.
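The conditions for autonomous display can be summarized in a small C model. It does not call the Screen API: the `window_cfg_t` and `pipeline_cfg_t` types, the integer format IDs, and the function names are hypothetical and exist only to restate the rules from this section. A window qualifies only when it is assigned to a configured pipeline, its overlay usage bit is set, and that pipeline supports the format of the window's buffer. In a real application, you make the corresponding settings with screen_set_window_property_iv() as described in the steps above.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical model, not the Screen API: it only records the settings
 * described in this section so that the conditions can be checked. */
typedef struct {
    bool has_pipeline;  /* models setting SCREEN_PROPERTY_PIPELINE */
    int pipeline;       /* the pipeline ID that was set */
    bool overlay;       /* models the SCREEN_USAGE_OVERLAY bit of SCREEN_PROPERTY_USAGE */
    int format;         /* window buffer format (arbitrary ID in this model) */
} window_cfg_t;

/* Hypothetical pipeline as configured in graphics.conf, with the buffer
 * formats that it supports (format IDs are arbitrary in this model). */
typedef struct {
    int id;
    const int *formats;
    size_t nformats;
} pipeline_cfg_t;

static bool supports_format(const pipeline_cfg_t *p, int format)
{
    for (size_t i = 0; i < p->nformats; i++) {
        if (p->formats[i] == format) {
            return true;
        }
    }
    return false;
}

/* Returns true if the window meets all of the conditions for being
 * displayed autonomously on its pipeline, false otherwise. */
static bool can_display_autonomously(const window_cfg_t *w,
                                     const pipeline_cfg_t *pipes,
                                     size_t npipes)
{
    if (!w->has_pipeline || !w->overlay) {
        return false;
    }
    for (size_t i = 0; i < npipes; i++) {
        if (pipes[i].id == w->pipeline) {
            return supports_format(&pipes[i], w->format);
        }
    }
    return false;   /* the pipeline isn't one of the configured pipelines */
}
```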

Screen composition

Many of the composition capabilities that are used in hardware composition can be achieved in Screen composition by the Composition Manager.

When your platform's hardware doesn't provide enough pipelines for the number of elements that you need to compose, or doesn't support a particular behavior, composition can still be achieved by the Composition Manager, an internal component of Screen.

The Composition Manager combines multiple window buffers into one resultant buffer, called the composite buffer (or Screen framebuffer).

Figure 2. An example of Screen composition with two windows and only one supported pipeline

This Screen composition example shows two windows; each window posts a different image. Those images are composed into one composite framebuffer, which binds to the single pipeline. This output is then fed into the associated display port and then onto the display hardware.

For Screen composition, don't set the SCREEN_PROPERTY_PIPELINE window property or the SCREEN_USAGE_OVERLAY bit in your SCREEN_PROPERTY_USAGE window property.
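The Composition Manager's role, combining several window buffers into one composite buffer that is then bound to a single pipeline, can be sketched as a back-to-front copy in z-order (the painter's algorithm). This is an illustrative C model, not Screen's actual implementation: the `win_t` type, the `compose()` function, and the simplification that a pixel with alpha 0 is transparent and any other pixel is opaque are assumptions made for this example.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Illustrative model only, not the Composition Manager's algorithm.
 * Pixels are 32-bit ARGB (0xAARRGGBB); alpha 0 is treated as
 * transparent and any other alpha as opaque, to keep the model short. */
typedef uint32_t pixel_t;

/* Hypothetical window description. */
typedef struct {
    int zorder;            /* higher values are closer to the viewer */
    int x, y, w, h;        /* destination rectangle in the composite buffer */
    const pixel_t *pixels; /* w * h pixels, row-major */
} win_t;

static int by_zorder(const void *a, const void *b)
{
    const win_t *wa = a, *wb = b;
    return (wa->zorder > wb->zorder) - (wa->zorder < wb->zorder);
}

/* Paints the windows back to front into fb (fb_w * fb_h pixels).
 * Sorts wins in place by ascending z-order; windows with equal
 * z-order end up in an unspecified relative order. */
static void compose(pixel_t *fb, int fb_w, int fb_h, win_t *wins, size_t n)
{
    qsort(wins, n, sizeof *wins, by_zorder);
    for (size_t i = 0; i < n; i++) {
        const win_t *win = &wins[i];
        for (int row = 0; row < win->h; row++) {
            for (int col = 0; col < win->w; col++) {
                int dx = win->x + col, dy = win->y + row;
                if (dx < 0 || dy < 0 || dx >= fb_w || dy >= fb_h) {
                    continue;   /* clipped: outside the composite buffer */
                }
                pixel_t p = win->pixels[row * win->w + col];
                if ((p >> 24) != 0) {
                    fb[dy * fb_w + dx] = p;   /* opaque pixel covers what's below */
                }
            }
        }
    }
}
```

Because every window is written into the same buffer, the display controller needs only one pipeline to show the result. The cost is that each composited pixel is read and written in memory, which is the memory bandwidth that Screen weighs when it chooses a composition strategy.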

You can also combine hardware and Screen composition to get the advantages of both. That is, you can combine multiple windows into one composite buffer, bind this buffer to a pipeline, and still take advantage of the hardware's ability to combine the output from multiple pipelines.

Figure 3. An example of both Screen and hardware composition with three windows, one composite buffer, and two supported pipelines

This composition example shows three windows. The first window posts and binds to one specific pipeline. The second and third windows post to a framebuffer where the buffers from these windows are combined. The framebuffer binds to the second pipeline. The output from both pipelines is combined and fed into the associated display port and then onto the display hardware.

As with hardware composition, this type of composition requires the correct Screen configuration and the appropriate window properties.

Pipeline ordering and the z-ordering of windows on a layer are applied independently of each other.

Pipeline ordering takes precedence over z-ordering operations in Screen. Screen does not have control over the ordering of hardware pipelines. Screen windows are always arranged in the z-order that is specified by the application.

If your application manually assigns pipelines, you must ensure that the z-order values make sense with regard to the pipeline order of the target hardware. For example, if you assign a high z-order value to a window (meaning that it is to be placed in the foreground), you must also assign this window to a top-layer pipeline. Otherwise, the window may not appear where you expect, regardless of its z-order value.
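The precedence rule and the consistency check recommended above can be expressed as a short C model. The `placed_win_t` type, the integer `pipeline_order` (higher meaning closer to the viewer), and both functions are hypothetical; they are not part of the Screen API, and the real pipeline order depends on the target hardware.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical description of a window that the application has
 * manually assigned to a pipeline. pipeline_order is the pipeline's
 * position in the hardware stacking order (higher = closer to the
 * viewer), which Screen doesn't control; zorder is the application's
 * z-order value. */
typedef struct {
    int pipeline_order;
    int zorder;
} placed_win_t;

/* Models the precedence rule: pipeline order decides first, and z-order
 * only decides between windows on the same pipeline. */
static bool appears_above(placed_win_t a, placed_win_t b)
{
    if (a.pipeline_order != b.pipeline_order) {
        return a.pipeline_order > b.pipeline_order;
    }
    return a.zorder > b.zorder;
}

/* Returns false if any window has a higher z-order than another window
 * but sits on a lower pipeline, so that its z-order has no effect. */
static bool zorder_matches_pipelines(const placed_win_t *w, size_t n)
{
    for (size_t i = 0; i < n; i++) {
        for (size_t j = 0; j < n; j++) {
            if (w[i].zorder > w[j].zorder &&
                w[i].pipeline_order < w[j].pipeline_order) {
                return false;
            }
        }
    }
    return true;
}
```

An application could run a check like `zorder_matches_pipelines()` over its own pipeline assignments during development to catch a foreground window that was accidentally placed on a lower pipeline.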

Comparing composition types

Hardware composition and Screen composition each have advantages and disadvantages. Some are subtle and can depend on the rate at which a window's contents are refreshed.

| | Hardware composition | Screen composition |
|---|---|---|
| Advantages | | |
| Disadvantages | | |