
This version of this document is no longer maintained. For the latest documentation, see http://www.qnx.com/developers/docs.


The QNX Neutrino network subsystem consists of a process called io-net that loads a number of shared objects. These shared objects typically consist of a protocol stack (such as npm-tcpip.so), a network driver, and other optional components such as filters and converters. These shared objects are arranged in a hierarchy, with the end user on the top, and hardware on the bottom.

The io-net component can load one or more protocol interfaces and drivers.

This document focuses on writing new network drivers, although most of the information applies to writing any module for io-net.

As indicated in the diagram, the shared objects that io-net loads don't communicate directly. Instead, each shared object registers a number of functions that io-net calls, and io-net provides functions that the shared object calls.

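Each shared object provides one or more of the following types of service:

- up producer: a module that produces (or passes on) packets for modules above it, such as a network driver
- down producer: a module that produces packets for modules below it, such as the TCP/IP stack
- converter: a module that converts packets of one type into another, such as the IP-EN converter, which takes IP packets on top and produces Ethernet (EN) packets on the bottom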

Note that these terms are relative to io-net and don't encompass any non-io-net interactions.

For example, a network card driver (while forming an integral part of the communications flow) is viewed only as an up producer as far as io-net is concerned -- it doesn't produce anything that io-net interacts with in the downward direction, even though it actually transmits the data originated by an upper module to the hardware.

A producer can be an up producer, a down producer, or both. For example, the TCP/IP module produces both types (up and down) of packets.

When a module is an up producer, it may pass packets on to modules above it. Whether the packet originated at that up producer or was received from another up producer below it, the next recipient sees the packet as coming from the up producer directly below it.

Only an up or down producer can connect with converters. A converter can't connect directly to another converter.

For example, in a PPP (Point-to-Point Protocol) over Ethernet implementation, we already have an IP producer (the stack) and an IP-PPP converter (the npm-pppmgr.so module). A PPP-EN converter is needed to convert a PPP frame into an Ethernet frame. However, since two converters can't be connected directly to each other, a PPP producer is needed to bridge the two converters.

The npm-pppoe.so module registers with io-net twice: once as a PPP producer, and again as a PPP-EN converter. The PPP producer is a "dummy" module that serves only to pass packets along, so that packets can get from the IP producer (npm-tcpip.so) to the EN producer (devn-xxx.so).

The complete chain is as follows: packets travel from the IP producer (npm-tcpip.so) to the IP-PPP converter (npm-pppmgr.so), to the dummy PPP producer (npm-pppoe.so), to the PPP-EN converter (npm-pppoe.so), to the EN producer (devn-xxx.so), and on to the Internet.
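Because io-net binds modules only by packet type, the chain above can be modeled as plain data. The C sketch below follows a downward path by matching types and alternating producer, converter, producer. It shows why the dummy PPP producer is required: without it, the IP-PPP converter would have nothing to hand its PPP frames to. The `module_t` type, the `PRODUCER`/`CONVERTER` kinds and `resolve_down()` are hypothetical illustrations, not part of the io-net API. Real modules register through io-net's own registration structures.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical model of io-net registrations (NOT the DDK's types).
 * A producer handles a single packet type; a converter accepts one
 * type on top and emits another type on the bottom. */
typedef enum { PRODUCER, CONVERTER } kind_t;

typedef struct {
    const char *name;
    kind_t      kind;
    const char *top;     /* converters only: type accepted from above */
    const char *bottom;  /* producers: type produced; converters: type emitted below */
} module_t;

/* Follow the downward path from producer mods[start], alternating
 * producer -> converter -> producer, and record each module's name in
 * path[]. Returns the number of modules on the path, or -1 if a
 * converter has no producer below it (converters can't be joined
 * directly to each other). */
static int resolve_down(const module_t *mods, size_t n, size_t start,
                        const char **path, size_t max)
{
    const module_t *cur = &mods[start];
    size_t count = 0;

    while (cur != NULL && count < max) {
        const module_t *next = NULL;

        path[count++] = cur->name;
        for (size_t i = 0; i < n; i++) {
            const module_t *m = &mods[i];

            if (cur->kind == PRODUCER && m->kind == CONVERTER &&
                strcmp(m->top, cur->bottom) == 0)
                next = m;   /* a converter that takes our type on top */
            else if (cur->kind == CONVERTER && m->kind == PRODUCER &&
                     m != &mods[start] && strcmp(m->bottom, cur->bottom) == 0)
                next = m;   /* a producer of the converter's output type */
        }
        if (cur->kind == CONVERTER && next == NULL)
            return -1;
        cur = next;
    }
    return (int)count;
}
```

With the five registrations from the PPPoE example, the path has five hops and ends at the driver. If you drop the dummy PPP producer, `resolve_down()` returns -1.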

io-net is a multithreaded process, but it doesn't create any threads on a module's behalf when it loads the module. A module has threads of its own only if it creates them itself, for example with pthread_create().

A thread is simply an execution entity that can pass through several modules. For example, when a thread in io-net sends an IP packet through the PPP over Ethernet (PPPoE) interface, it calls io-net's tx_down() function, which calls into the rx_down() function of npm-pppmgr.so. After converting the IP packet into a PPP frame, that function calls io-net's tx_down() again, which in turn calls the rx_down() function of the dummy PPP producer in npm-pppoe.so.

From a module's point of view, io-net can call any of the module's functions at any time, so two threads could access the same data structures at once. It's important to protect the module's data with a mutex or another synchronization object.
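Here is a minimal sketch of that protection, using POSIX mutexes. The `module_state_t` structure, `module_account_tx()` and the `io_net_thread()` simulation are hypothetical. They stand in for a module's private data, for one of its entry points, and for io-net threads calling into it concurrently.

```c
#include <pthread.h>
#include <stdint.h>

/* Hypothetical per-module state; io-net may call into the module from
 * several threads at once, so every field is guarded by `lock`. */
typedef struct {
    pthread_mutex_t lock;
    uint64_t        tx_packets;
    uint64_t        tx_bytes;
} module_state_t;

static void module_state_init(module_state_t *st)
{
    pthread_mutex_init(&st->lock, NULL);
    st->tx_packets = 0;
    st->tx_bytes = 0;
}

/* Body of a (hypothetical) entry point: update the shared counters
 * while holding the lock so concurrent callers don't lose updates. */
static void module_account_tx(module_state_t *st, uint32_t len)
{
    pthread_mutex_lock(&st->lock);
    st->tx_packets++;
    st->tx_bytes += len;
    pthread_mutex_unlock(&st->lock);
}

/* Simulates one io-net thread calling into the module repeatedly. */
static void *io_net_thread(void *arg)
{
    module_state_t *st = arg;

    for (int i = 0; i < 10000; i++)
        module_account_tx(st, 64);
    return NULL;
}
```

If you start two `io_net_thread()` threads on the same state and join them, the counters come out exactly 20000 packets and 20000 × 64 bytes. Without the mutex, increments could be lost.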

When io-net calls a module's initialization function, it passes in a dispatch handle (dpp). The dispatcher services all the path names that io-net creates (/dev/io-net/*) and has a thread pool associated with it. The maximum number of threads in the pool is controlled by io-net's -t option. Because threads in the pool are created and destroyed dynamically, it's common for pidin -p io-net to show noncontiguous thread IDs.

A module can use the dpp to create its own path name and act as its own private resource manager. For example, the npm-pppmgr.so module can attach a /dev/socket/pppmgr path name that reports statistics when it's queried.

When you start io-net from the command line, you tell it which drivers and protocols to load:

$ io-net -del900 verbose -pttcpip if=en0:11.2 &

This causes io-net to load the devn-el900.so Ethernet driver and the tiny TCP/IP protocol stack. The verbose and if=en0:11.2 options are suboptions that are passed to the individual components.

Alternatively, you can use the mount and umount commands to start and stop modules dynamically. The previous example could be rewritten as:

$ io-net &

$ mount -Tio-net -overbose devn-el900.so

$ mount -Tio-net -oif=en0:11.2 npm-ttcpip.so

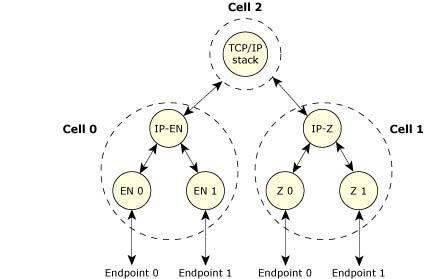

Regardless of the way that you've started it, here's the "big picture" that results:

Big picture of io-net.

In the diagram above, we've shown io-net as the "largest" entity. This was done simply to indicate that io-net is responsible for loading all the other modules (as shared objects), and that it's the one that "controls" the operation of the entire protocol stack.

Let's look at the hierarchy, from top to bottom:

As far as Neutrino's namespace is concerned, the following entries exist:

At this point, you could open() /dev/io-net/en0, for example, and perform devctl() operations on it -- this is how the nicinfo command gets the Ethernet statistics from the driver.

Here's another view of io-net, this time with two different protocols at the bottom:

Cells and endpoints.

As you can see, there are three levels in this hierarchy. At the topmost level, we have the TCP/IP stack. As described earlier, it's considered to be a down producer (it doesn't produce or pass on anything for modules above it).

In reality, the stack probably registers as both an up and down producer. This is permitted by io-net to facilitate the stacking of protocols.

When the TCP/IP stack registered, it told io-net that it produces packets in the downward direction of type IP -- there's no other binding between the stack and its drivers. When a module registers, io-net assigns it a cell number, 2 in this case.

Joining the stack (down producer) to the drivers (up producers), we have two converter modules. Take the converter module labeled IP-EN as an example. When this module registered as type _REG_CONVERTOR, it told io-net that it takes packets of type IP on top and packets of type EN on the bottom.

Again, this is the only binding between the IP stack and its lower-level drivers. As far as io-net is concerned, the IP-EN portion, along with its Ethernet drivers, is called cell 0, and the IP-Z portion, along with its Z-protocol drivers, is called cell 1.

The purpose of the intermediate converters is twofold:

Finally, on the bottom level of the hierarchy, we have two different Ethernet drivers and two different Z-protocol drivers. These are up producers from io-net's perspective, because they generate data only in the upward direction. These drivers are responsible for the low-level hardware details. As with the other components mentioned above, these components advertise themselves to io-net indicating the name of the service that they're providing, and that's what's used by io-net to "hook" all the pieces together.

Since all seven pieces are independent shared objects that are loaded by io-net when it starts up (or later, via the mount command), it's important to realize that the keys to the interconnection of all the pieces are:

The next thing we need to look at is the life cycle of a packet -- how data gets from the hardware to the end user, and back to the hardware.

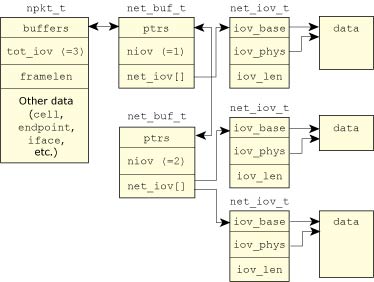

The main data structure that holds all packet data is the npkt_t data type. (For more information about the data structures described in this section, see the Network DDK API chapter.) The npkt_t structure maintains a tail queue of buffers that contain the packet's data.

A tail queue uses a pair of pointers, one to the head of the queue and the other to the tail. The elements are doubly linked; an arbitrary element can be removed without traversing the queue. New elements can be added before or after an existing element, or at the head or tail of the queue. The queue may be traversed only in the forward direction.
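The sketch below shows those properties with the TAILQ macros from the BSD-derived `<sys/queue.h>` header, which is also available with glibc. It inserts at the head, at the tail and after an existing element. It then unlinks an element directly, without searching for it, and finally walks the queue forward. The `struct elem` type and the helper functions are hypothetical and serve only as an illustration.

```c
#include <stddef.h>
#include <sys/queue.h>

/* Hypothetical element type, used only to illustrate the TAILQ macros. */
struct elem {
    int value;
    TAILQ_ENTRY(elem) link;   /* next pointer and back-pointer */
};

/* The head holds a pointer to the first element and a pointer to the
 * last element's "next" field, so inserting at the tail is O(1). */
TAILQ_HEAD(elem_list, elem);

/* Exercise each insertion position, then unlink an element directly
 * (no search is needed because each element knows its neighbors). */
static void build_demo(struct elem_list *head, struct elem e[4])
{
    TAILQ_INIT(head);
    TAILQ_INSERT_TAIL(head, &e[0], link);          /* e0          */
    TAILQ_INSERT_TAIL(head, &e[2], link);          /* e0 e2       */
    TAILQ_INSERT_HEAD(head, &e[3], link);          /* e3 e0 e2    */
    TAILQ_INSERT_AFTER(head, &e[0], &e[1], link);  /* e3 e0 e1 e2 */
    TAILQ_REMOVE(head, &e[0], link);               /* e3 e1 e2    */
}

/* Walk the queue from head to tail. */
static int sum_values(struct elem_list *head)
{
    int sum = 0;
    struct elem *e;

    TAILQ_FOREACH(e, head, link)
        sum += e->value;
    return sum;
}
```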

The buffers form a doubly-linked list, and are managed via the TAILQ macros from <sys/queue.h>:

Buffer data is stored in a net_buf_t data type. This data type consists of a list of net_iov_t structures, each containing a virtual (or base) address, physical address, and length, that are used to indicate one or more buffers:

Data structures associated with a packet.

The TAILQ macros let you step through the list of elements, as the following code snippet illustrates:

```c
net_buf_t *buf;
net_iov_t *iov;
int i;

// walk all buffers
for (buf = TAILQ_FIRST(&npkt->buffers); buf; buf = TAILQ_NEXT(buf, ptrs)) {
    for (i = 0, iov = buf->net_iov; i < buf->niov; i++, iov++) {
        // buffer is        : iov->iov_base
        // length is        : iov->iov_len
        // physical addr is : iov->iov_phys
    }
}
```
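To make the snippet compile outside the DDK, the version below defines simplified stand-ins for `npkt_t`, `net_buf_t` and `net_iov_t`. These stand-ins contain only the fields that the snippet uses. They are assumptions for illustration, and the real definitions come from the Network DDK headers and contain more fields. The hypothetical `npkt_total_len()` uses the same walk to add up the data length across every fragment of every buffer.

```c
#include <stddef.h>
#include <stdint.h>
#include <sys/queue.h>

/* Simplified stand-ins for the DDK types (hypothetical). */
typedef struct {
    void     *iov_base;  /* virtual address of the fragment */
    uint64_t  iov_phys;  /* physical address (unused here) */
    size_t    iov_len;   /* fragment length in bytes */
} net_iov_t;

typedef struct net_buf {
    TAILQ_ENTRY(net_buf) ptrs;
    int        niov;
    net_iov_t *net_iov;
} net_buf_t;

typedef struct {
    TAILQ_HEAD(, net_buf) buffers;
} npkt_t;

/* Total number of data bytes in the packet. */
static size_t npkt_total_len(npkt_t *npkt)
{
    size_t total = 0;
    net_buf_t *buf;
    net_iov_t *iov;
    int i;

    for (buf = TAILQ_FIRST(&npkt->buffers); buf; buf = TAILQ_NEXT(buf, ptrs))
        for (i = 0, iov = buf->net_iov; i < buf->niov; i++, iov++)
            total += iov->iov_len;
    return total;
}
```

Consider a packet made of one buffer with two fragments (20 and 30 bytes) and a second buffer with one 14-byte fragment. For that packet, `npkt_total_len()` returns 64.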

We'll start with the downward direction (from the end user to the hardware). A message is sent from the end user (via the socket library), and arrives at the TCP/IP stack. The TCP/IP stack does whatever error checking and formatting it needs to do on the data. At some point, the TCP/IP stack sends a fully formed IP packet down io-net's hierarchy. No provision is made for any link-level headers, as this is the job of the converter module.

Since the TCP/IP stack and the other modules aren't bound to each other, it's up to io-net to do the work of accepting the packet from the TCP/IP stack and giving it to the converter module. The TCP/IP stack informs io-net that it has a packet that should be sent to a lower level by calling the tx_down() function within io-net. The io-net manager looks at the various fields in the packet and the arguments passed to the function, and calls the rx_down() function in the IP-EN converter module.

The contents of the packet aren't copied. Since all these modules (e.g. the TCP/IP stack and the IP module) are loaded as shared objects into io-net's address space, only pointers to the data need to be transferred between modules, not the data itself.
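The following is a toy model of that handoff. A manager-side `toy_tx_down()` looks up the module registered for a packet type and calls that module's `rx_down`-style entry point with the same packet pointer. All the names here (`toy_pkt_t`, `toy_reg_t`, `toy_tx_down()`, `record_rx_down()`) are hypothetical. The lookup by type string is a simplification of what io-net actually examines.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical, simplified stand-ins; not the io-net API. */
typedef struct {
    unsigned char *data;
    size_t         len;
} toy_pkt_t;

typedef int (*toy_rx_down_fn)(toy_pkt_t *pkt, void *hdl);

typedef struct {
    const char     *type;     /* packet type this module accepts from above */
    toy_rx_down_fn  rx_down;  /* entry point registered with the manager */
    void           *hdl;      /* module's private handle */
} toy_reg_t;

/* Manager side: find the module registered for this packet type and
 * pass it the packet pointer. Only the pointer changes hands. */
static int toy_tx_down(const toy_reg_t *regs, size_t n, const char *type,
                       toy_pkt_t *pkt)
{
    for (size_t i = 0; i < n; i++)
        if (strcmp(regs[i].type, type) == 0)
            return regs[i].rx_down(pkt, regs[i].hdl);
    return -1;   /* no module registered for this type */
}

/* Example module entry point: remember which packet it was handed. */
static int record_rx_down(toy_pkt_t *pkt, void *hdl)
{
    *(toy_pkt_t **)hdl = pkt;
    return 0;
}
```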

Once the packet arrives in the IP-EN converter module, a similar set of events occurs as described above: the IP-EN converter module converts the packet to an Ethernet packet, and sends it to the Ethernet module to be sent out to the hardware. Note that the IP-EN converter module needs to add data in front of the packet in order to encapsulate the IP packet within an Ethernet packet.

Again, to avoid copying the packet data in order to insert the Ethernet encapsulation header in front of it, only the data pointers are moved. By inserting a net_buf_t at the start of the packet's queue, the Ethernet header can be prepended to the data buffer without actually copying the IP portion of the packet that originated at the TCP/IP stack.
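Here is a sketch of that prepend step. It uses simplified stand-ins for the DDK types, which are assumptions with only the fields used here. The hypothetical `npkt_prepend_hdr()` fills in a one-fragment `net_buf_t` that points at the header bytes and links it in at the head of the packet's queue. The buffer that holds the IP packet is left exactly where it was. In a real module, the header memory and the `net_buf_t` would be allocated as described in the Network DDK API chapter, and the physical address would be filled in.

```c
#include <stddef.h>
#include <stdint.h>
#include <sys/queue.h>

/* Simplified stand-ins for the DDK types (hypothetical). */
typedef struct {
    void     *iov_base;
    uint64_t  iov_phys;
    size_t    iov_len;
} net_iov_t;

typedef struct net_buf {
    TAILQ_ENTRY(net_buf) ptrs;
    int        niov;
    net_iov_t *net_iov;
} net_buf_t;

typedef struct {
    TAILQ_HEAD(, net_buf) buffers;
} npkt_t;

/* Prepend a link-level header by linking a new buffer in at the head
 * of the packet's queue. Existing buffers are neither copied nor
 * modified. */
static void npkt_prepend_hdr(npkt_t *npkt, net_buf_t *hdr_buf,
                             net_iov_t *hdr_iov, void *hdr, size_t hdr_len)
{
    hdr_iov->iov_base = hdr;
    hdr_iov->iov_phys = 0;       /* placeholder: a driver needs the real address */
    hdr_iov->iov_len  = hdr_len;
    hdr_buf->niov     = 1;
    hdr_buf->net_iov  = hdr_iov;
    TAILQ_INSERT_HEAD(&npkt->buffers, hdr_buf, ptrs);
}
```

After a 14-byte Ethernet header is prepended, the queue holds the header buffer followed by the untouched IP buffer.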

In the upward direction, a similar chain of events occurs:

Note that in an upward-headed packet, data is never added to the packet as it travels up to the various modules, so the list of net_buf_t structures isn't manipulated. For efficiency, the arguments to io-net's tx_up() function (and correspondingly to a registered module's rx_up() function) include off and framelen_sub. These are used to indicate how much of the data within the buffer is of interest to the level to which it's being delivered.

For example, when an IP packet arrives over the Ethernet, there are 14 bytes of Ethernet header at the beginning of the buffer. This Ethernet header is of no interest to the IP module -- it's relevant only to the Ethernet and IP-EN converter modules. Therefore, the off argument is set to 14 to indicate to the next higher layer that it should ignore the first 14 bytes of the buffer. This saves the various levels in io-net from continually having to copy buffer data from one format to another.

The framelen_sub argument operates in a similar manner, except that it refers to the tail end of the buffer -- it specifies how many bytes should be ignored at the end of the buffer, and is used with protocols that place a tail-end encapsulation on the data.
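The arithmetic a receiving layer does with these two values is small. The sketch below shows it with a hypothetical helper, `layer_view()`, that is not part of the DDK. Given the whole frame, off and framelen_sub, it returns where this layer's data starts and how long it is. It rejects values that don't fit inside the frame.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical helper: return the part of a received frame that is of
 * interest to this layer, skipping `off` bytes at the front and
 * `framelen_sub` bytes at the end. Returns NULL if they don't fit. */
static const uint8_t *layer_view(const uint8_t *frame, size_t framelen,
                                 size_t off, size_t framelen_sub,
                                 size_t *len_out)
{
    if (off > framelen || framelen_sub > framelen - off)
        return NULL;
    *len_out = framelen - off - framelen_sub;
    return frame + off;
}
```

For a 64-byte Ethernet frame with off set to 14, the IP layer sees 50 bytes starting at offset 14. If a protocol also adds a 4-byte trailer (framelen_sub of 4), it sees 46 bytes.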

See io-net in the Utilities Reference for more details.

The purpose of the network driver is to detect and initialize one or more NIC (Network Interface Controller) devices, and allow for transmission and reception of data via the NIC. Additional tasks typically performed by a network driver include link monitoring and statistics gathering.

The networking subsystem and io-net.

The network driver is loaded by io-net. This happens either when io-net starts, or later in response to a "mount" request.

A network driver must contain a global structure called io_net_dll_entry, of type io_net_dll_entry_t. The io-net process finds this structure by calling dlsym(). This structure must contain a function pointer to the driver's main initialization routine, which io-net calls when the driver has been loaded. This function is responsible for parsing the option string that was passed to the driver (if any), and detecting any network interface hardware, in accordance with the supplied options. For each NIC device that the driver detects, it creates a software instance of the interface, by allocating the necessary structures, and registering the interface with io-net. After it registers with io-net, the driver advertises its capabilities to the other components within the networking subsystem.

By default, the driver should attempt to detect and instantiate every NIC in the system that the driver supports. However, the driver may be requested to instantiate a specific NIC interface, via one or more driver options.

Sometimes, certain options are mandatory. For example, in the case of a non-PCI device, the driver may not be able to automatically determine the interrupt number and base address of the device. Also, on many embedded systems, the driver may not be able to determine the station (MAC) address of the device. In this case, the MAC address needs to be passed to the driver via an option.
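The option string is a comma-separated list of suboptions, such as the verbose option in the io-net example earlier. One way to parse it is with the POSIX `getsubopt()` function, as in the sketch below. The option names (vid, did, irq, mac, verbose), the `drv_opts_t` structure and `drv_parse_options()` are assumptions for illustration. Check your driver's documentation for the options it actually accepts.

```c
#define _XOPEN_SOURCE 700   /* exposes getsubopt() in <stdlib.h> */
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>

/* Hypothetical driver settings filled in from the option string. */
typedef struct {
    long    vid, did, irq;   /* -1 means "not specified" */
    int     verbose;
    int     have_mac;
    uint8_t mac[6];
} drv_opts_t;

/* Parse a suboption string such as
 *   "vid=0x1234,did=0x5678,mac=00:11:22:33:44:55,verbose"
 * The string is modified in place. Returns 0 on success, -1 on error. */
static int drv_parse_options(char *options, drv_opts_t *o)
{
    char *const tokens[] = { "vid", "did", "irq", "mac", "verbose", NULL };
    char *value;
    int idx;

    o->vid = o->did = o->irq = -1;
    o->verbose = o->have_mac = 0;

    while (options != NULL && *options != '\0') {
        idx = getsubopt(&options, tokens, &value);
        switch (idx) {
        case 0: case 1: case 2: {        /* numeric: vid, did, irq */
            long v;

            if (value == NULL)
                return -1;
            v = strtol(value, NULL, 0);  /* accepts decimal or 0x... */
            if (idx == 0)
                o->vid = v;
            else if (idx == 1)
                o->did = v;
            else
                o->irq = v;
            break;
        }
        case 3:                          /* mac=xx:xx:xx:xx:xx:xx */
            if (value == NULL ||
                sscanf(value, "%2hhx:%2hhx:%2hhx:%2hhx:%2hhx:%2hhx",
                       &o->mac[0], &o->mac[1], &o->mac[2],
                       &o->mac[3], &o->mac[4], &o->mac[5]) != 6)
                return -1;
            o->have_mac = 1;
            break;
        case 4:                          /* verbose (may be repeated) */
            o->verbose++;
            break;
        default:                         /* unknown suboption */
            return -1;
        }
    }
    return 0;
}
```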

After parsing the options, the driver knows how and where to look for NIC devices.

For a PCI device, the driver typically searches using pci_attach_device(). The driver searches based on the values specified via the vid, did, and pci options. If no options were specified, the driver will typically search based on a well-known internal list of PCI Vendor and Device IDs that correspond to the devices for which the driver was developed.
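The matching decision can be separated from the bus search itself. The sketch below does not call `pci_attach_device()`. It models only the rule described above: explicit vid/did options take precedence, and otherwise the device must appear in the driver's built-in ID table. The table entries are placeholder values, not real hardware IDs, and `should_attach()` is hypothetical.

```c
#include <stddef.h>
#include <stdint.h>

typedef struct { uint16_t vid, did; } pci_id_t;

/* Placeholder IDs standing in for the devices a driver was written for. */
static const pci_id_t supported_ids[] = {
    { 0x1234, 0x0001 },
    { 0x1234, 0x0002 },
};

/* Should a device found during the bus scan be attached?
 * opt_vid/opt_did are -1 when no vid=/did= option was given. */
static int should_attach(uint16_t vid, uint16_t did, int opt_vid, int opt_did)
{
    if (opt_vid != -1 || opt_did != -1)   /* explicit options win */
        return (opt_vid == -1 || vid == opt_vid) &&
               (opt_did == -1 || did == opt_did);

    for (size_t i = 0; i < sizeof supported_ids / sizeof supported_ids[0]; i++)
        if (supported_ids[i].vid == vid && supported_ids[i].did == did)
            return 1;
    return 0;
}
```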

For non-PCI devices, the driver usually relies on a memory or I/O base address being specified in order to locate the NIC device. On certain systems, the driver may be able to find the device at well-known locations, without the need for the location to be specified via an option. The driver will then typically do some sanity checks to verify that an operational device indeed exists at the expected location.

Once it's been determined that a NIC device is present, the driver initializes and configures the interface. It's always a good idea for the driver to reset the device before proceeding, since the device could be in an unknown state (e.g. in the case of a previous incarnation of io-net terminating prematurely without getting a chance to shut off the device properly).

Next, the driver typically allocates some structures in order to store information about the device state. The normal practice is to allocate a driver-specific structure, whose layout is known only to the network driver. The driver may pass a pointer to this structure to other networking subsystem functions. When calls are made into the driver's entry points, the subsystem passes this pointer, so the driver always has access to any data associated with the device. It's a good idea for the driver to store any configuration-related data in the nic_config_t structure so that the driver can take advantage of more of the functionality in libdrvr. The nic_config_t structure can be included in the driver-specific structure.

Also, if higher-level software queries the driver for configuration information, it will expect the information to be in the format defined by the nic_config_t structure, so it's convenient to keep a copy of this structure around.

Avoid using global variables in your driver if possible. Referencing global variables is much slower than accessing data that resides in a dynamically allocated structure (e.g. one allocated with calloc()).
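The per-device pattern looks roughly like the sketch below. Each NIC gets its own `calloc()`'d structure, and a pointer to it serves as the handle that comes back into every entry point, so no globals are needed. `nic_config_stub_t` is a hypothetical placeholder for `nic_config_t`, whose real layout is defined by the Network DDK. `my_nic_t` and its functions are likewise illustrative.

```c
#include <pthread.h>
#include <stdint.h>
#include <stdlib.h>

/* Placeholder for nic_config_t (hypothetical fields). */
typedef struct {
    uint8_t  mac[6];
    uint32_t mtu;
} nic_config_stub_t;

/* Driver-private, per-device state; its layout is known only to the driver. */
typedef struct {
    nic_config_stub_t cfg;   /* configuration kept in one place */
    pthread_mutex_t   lock;  /* guards the fields below */
    uint64_t          rx_packets;
} my_nic_t;

/* Allocate one device instance; the returned pointer is the handle the
 * driver would give to the networking subsystem. */
static void *my_nic_create(uint32_t mtu)
{
    my_nic_t *nic = calloc(1, sizeof *nic);   /* zero-filled */

    if (nic == NULL)
        return NULL;
    nic->cfg.mtu = mtu;
    pthread_mutex_init(&nic->lock, NULL);
    return nic;
}

/* An entry point gets the handle back and recovers its own state. */
static uint64_t my_nic_count_rx(void *hdl)
{
    my_nic_t *nic = hdl;
    uint64_t n;

    pthread_mutex_lock(&nic->lock);
    n = ++nic->rx_packets;
    pthread_mutex_unlock(&nic->lock);
    return n;
}

static void my_nic_destroy(void *hdl)
{
    my_nic_t *nic = hdl;

    pthread_mutex_destroy(&nic->lock);
    free(nic);
}
```

Because the state travels with the handle, two NICs served by the same driver keep independent counters and configurations.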

Network drivers generally use an interrupt handler to receive notification of events such as packet reception. We strongly recommend that network drivers use InterruptAttachEvent() instead of InterruptAttach() to handle interrupts. In an RTOS, the time spent in an interrupt handler must be kept to an absolute minimum so that it doesn't hurt the overall realtime determinism of the system. Therefore, the work that network drivers typically do, such as copying packet data, traversing linked lists, or iterating over ring buffers, should be performed at process time (in a thread) rather than in the interrupt handler.

The driver normally creates a thread during initialization to handle events (such as interrupt events and timer events). Be extra careful here: multiple threads could call into your driver simultaneously, and the driver's own event thread could be running at the same time, so it's very important that all data and device entities are protected (e.g. using mutexes). Make sure you're familiar with multithreaded programming concepts before attempting to write a network driver.
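InterruptAttachEvent() and the events it delivers are QNX-specific. The sketch below therefore uses a POSIX condition variable in place of the event the kernel would deliver. `drv_post()` plays the part of the interrupt: it only records what happened and wakes the thread. `drv_event_thread()` does the actual work at process time. All of the names are hypothetical.

```c
#include <pthread.h>

/* Hypothetical event kinds handled by the driver's event thread. */
enum { EV_NONE = 0, EV_RX = 1 << 0, EV_TIMER = 1 << 1, EV_QUIT = 1 << 2 };

typedef struct {
    pthread_mutex_t lock;
    pthread_cond_t  cond;
    unsigned        pending;        /* bitmask of posted events */
    unsigned        rx_handled;     /* written only by the event thread */
    unsigned        timer_handled;  /* written only by the event thread */
} drv_events_t;

static void drv_events_init(drv_events_t *ev)
{
    pthread_mutex_init(&ev->lock, NULL);
    pthread_cond_init(&ev->cond, NULL);
    ev->pending = EV_NONE;
    ev->rx_handled = ev->timer_handled = 0;
}

/* Stand-in for the interrupt path: record the event and wake the
 * thread; no packet processing happens here. */
static void drv_post(drv_events_t *ev, unsigned what)
{
    pthread_mutex_lock(&ev->lock);
    ev->pending |= what;
    pthread_cond_signal(&ev->cond);
    pthread_mutex_unlock(&ev->lock);
}

/* The driver's event thread: waits for events, then processes them
 * outside the lock so drv_post() is never blocked for long. */
static void *drv_event_thread(void *arg)
{
    drv_events_t *ev = arg;

    for (;;) {
        pthread_mutex_lock(&ev->lock);
        while (ev->pending == EV_NONE)
            pthread_cond_wait(&ev->cond, &ev->lock);
        unsigned what = ev->pending;
        ev->pending = EV_NONE;
        pthread_mutex_unlock(&ev->lock);

        if (what & EV_RX)
            ev->rx_handled++;       /* e.g. drain the receive ring */
        if (what & EV_TIMER)
            ev->timer_handled++;    /* e.g. check the link state */
        if (what & EV_QUIT)
            return NULL;
    }
}
```

Events posted while the thread is busy are merged into one wakeup. A real driver therefore drains all pending work on each wakeup instead of assuming one event per interrupt.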

Once the device is initialized and is ready to be made operational, the driver registers the interface with io-net. See the reg field of the io_net_self_t structure for details on how to register with io-net. When the driver registers, it provides various information to io-net, to allow the networking subsystem to call into the driver's various entry points.

After registering with io-net, the driver must advertise its capabilities to the networking subsystem. See the dl_advert field of the io_net_registrant_funcs_t structure for more details.

At this point, the device is ready to begin operation. The driver's entry points may now be called at any time to perform various tasks such as packet transmission. The driver may also call back into the networking subsystem, for example, to deliver packets that have been received from the medium.
