The "Oversleeping: errors in delays" section explains the behavior of the sleep-related functions. Timers are similarly affected by the design of the PC hardware. For example, let's consider the following C code:

```c
#include <assert.h>
#include <sched.h>
#include <signal.h>
#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <time.h>
#include <sys/neutrino.h>
#include <sys/netmgr.h>
#include <sys/syspage.h>

int main( int argc, char *argv[] )
{
    int                 rcvid;
    int                 chid;
    int                 pulse_id = 0;
    timer_t             timer_id;
    struct sigevent     event;
    struct itimerspec   timer;
    struct _clockperiod clkper;
    struct _pulse       pulse;
    uint64_t            last_cycles = -1;   /* sentinel: no event seen yet */
    uint64_t            current_cycles;
    double              cpu_freq;
    time_t              start;

    /* Get the CPU frequency in order to do precise time
       calculations. */
    cpu_freq = SYSPAGE_ENTRY( qtime )->cycles_per_sec;

    /* Set our priority to the maximum, so we won't get disrupted
       by anything other than interrupts. */
    {
        struct sched_param param;
        int ret;

        param.sched_priority = sched_get_priority_max( SCHED_RR );
        ret = sched_setscheduler( 0, SCHED_RR, &param );
        assert ( ret != -1 );
    }

    /* Create a channel to receive timer events on. */
    chid = ChannelCreate( 0 );
    assert ( chid != -1 );

    /* Set up the timer and timer event. */
    event.sigev_notify = SIGEV_PULSE;
    event.sigev_coid = ConnectAttach ( ND_LOCAL_NODE,
                                       0, chid, 0, 0 );
    assert ( event.sigev_coid != -1 );
    event.sigev_priority = getprio(0);
    /* The code field of struct _pulse is 8 bits wide, so use a code in
       the user range (_PULSE_CODE_MINAVAIL.._PULSE_CODE_MAXAVAIL)
       rather than a value such as 1023. */
    event.sigev_code = _PULSE_CODE_MINAVAIL;
    event.sigev_value.sival_int = pulse_id;

    if ( timer_create( CLOCK_REALTIME, &event, &timer_id ) == -1 )
    {
        perror ( "can't create timer" );
        exit( EXIT_FAILURE );
    }

    /* Change the timer request to alter the behavior. */
#if 1
    timer.it_value.tv_sec = 0;
    timer.it_value.tv_nsec = 1000000;
    timer.it_interval.tv_sec = 0;
    timer.it_interval.tv_nsec = 1000000;
#else
    timer.it_value.tv_sec = 0;
    timer.it_value.tv_nsec = 999847;
    timer.it_interval.tv_sec = 0;
    timer.it_interval.tv_nsec = 999847;
#endif

    /* Start the timer. */
    if ( timer_settime( timer_id, 0, &timer, NULL ) == -1 )
    {
        perror( "can't start timer" );
        exit( EXIT_FAILURE );
    }

    /* Set the tick to 1 ms. Otherwise if left to the default of
       10 ms, it would take 65 seconds to demonstrate. */
    clkper.nsec = 1000000;
    clkper.fract = 0;
    ClockPeriod ( CLOCK_REALTIME, &clkper, NULL, 0 ); // 1ms

    /* Keep track of time. */
    start = time(NULL);

    for( ;; )
    {
        /* Wait for a pulse. */
        rcvid = MsgReceivePulse ( chid, &pulse, sizeof( pulse ),
                                  NULL );

        /* Minimal pulse validation: skip errors and foreign pulses. */
        if ( rcvid == -1 || pulse.code != _PULSE_CODE_MINAVAIL )
            continue;

        current_cycles = ClockCycles();

        /* Don't print the first iteration. */
        if ( last_cycles != (uint64_t)-1 )
        {
            /* Interval since the previous event, in seconds. */
            double elapse = (current_cycles - last_cycles) /
                            cpu_freq;

            /* Print a line if the interval between two events is
               longer than 1.05 ms. */
            if ( elapse > .00105 )
            {
                printf("A lapse of %f ms occurred at %ld seconds\n",
                       elapse * 1000.0, (long)( time( NULL ) - start ));
            }
        }

        last_cycles = current_cycles;
    }
}
```

The program checks whether the time between two consecutive timer events is greater than 1.05 ms. Given QNX Neutrino's realtime behavior, most people expect that this condition will never occur. It will, not because the kernel is misbehaving, but because of a limitation of the PC hardware: the OS can't generate a timer event at exactly 1.0 ms; the closest it can get is .999847 ms. This has unexpected side effects.

Where's the catch?

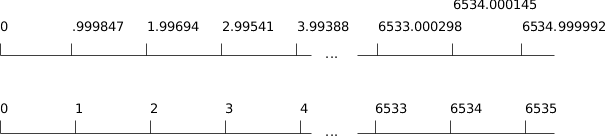

As described earlier in this chapter, there's a 153-nanosecond (ns) discrepancy between the request and what the hardware can do. The kernel timer manager is invoked every .999847 ms. Every time a timer fires, the kernel checks to see if the timer is periodic and, if so, adds the number of nanoseconds to the expected timer expiring point, no matter what the current time is. This phenomenon is illustrated in the following diagram:

Figure 1. Actual and expected timer expirations.

Figure 1. Actual and expected timer expirations.The first line illustrates the actual time at which timer management occurs. The second line is the time at which the kernel expects the timer to be fired. Note what happens at 6534: the next value appears not to have incremented by 1 ms, thus the event 6535 won't be fired!

For signal frequencies, this phenomenon is called a beat. When two signals of different frequencies are "added," a third frequency is generated. You can see this effect if you use a camcorder to record a TV image. Because a TV is updated at 60 Hz and camcorders usually operate at a different frequency, at playback you can often see a white line scrolling through the TV image. The speed of that line is related to the difference in frequency between the camcorder and the TV.

In this case we have two frequencies: one at 1000 Hz (the requested 1 ms timer) and the other at about 1000.153 Hz (the .999847 ms hardware tick). Thus, the beat frequency is about 0.153 Hz, or one blip every 6535 milliseconds.
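The figures follow directly from the two periods. A worked version of the arithmetic, using only the .999847 ms tick and the 1 ms request from this section:

$$
f_{\text{tick}} = \frac{1}{0.999847\ \text{ms}} \approx 1000.153\ \text{Hz}, \qquad
f_{\text{timer}} = \frac{1}{1\ \text{ms}} = 1000\ \text{Hz}
$$

$$
f_{\text{beat}} = f_{\text{tick}} - f_{\text{timer}} \approx 0.153\ \text{Hz}, \qquad
T_{\text{beat}} = \frac{1}{f_{\text{beat}}} = \frac{0.999847\ \text{ms} \times 1\ \text{ms}}{1\ \text{ms} - 0.999847\ \text{ms}} \approx 6535\ \text{ms}
$$

Equivalently, the expected expiration drifts 153 ns further ahead of the tick on every tick, so a whole tick (999,847 ns) is lost after about 999847 / 153 ≈ 6535 ticks.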

This behavior has the benefit of giving you the expected number of fired timers, on average. In the example above, after 1 minute, the program would have received 60000 fired timer events (1000 events/s × 60 s). If your design requires very precise timing, you have no choice but to request a timer event of .999847 ms rather than 1 ms. This can make the difference between a robot moving very smoothly and one scratching your car.